28 breaking releases

| Version | Release date |
|---|---|
| 0.29.0 | Mar 28, 2025 |
| 0.27.0 | Jan 28, 2025 |
| 0.26.0 | Dec 30, 2024 |
| 0.24.0 | Nov 25, 2024 |
| 0.8.0 | Mar 21, 2023 |

#44 in Command line utilities

631 downloads per month

1.5MB, 16K SLoC

AIChat: All-in-one LLM CLI Tool

AIChat is an all-in-one LLM CLI tool featuring Shell Assistant, CMD & REPL Mode, RAG, AI Tools & Agents, and more.

Install

Package Managers

- Rust Developers: `cargo install aichat`
- Homebrew/Linuxbrew Users: `brew install aichat`
- Pacman Users: `pacman -S aichat`
- Windows Scoop Users: `scoop install aichat`
- Android Termux Users: `pkg install aichat`

Pre-built Binaries

Download pre-built binaries for macOS, Linux, and Windows from GitHub Releases, extract them, and add the aichat binary to your $PATH.
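As a minimal sketch of the last step, the snippet below adds a directory to `$PATH` for the current shell session only if it is not already listed. The directory `$HOME/.local/bin` and the helper name `path_contains` are assumptions chosen for illustration; use whichever directory you extracted the binary into.

```shell
# Assumed location of the extracted aichat binary; adjust as needed.
install_dir="$HOME/.local/bin"

# path_contains DIR: succeed if DIR is already an entry in $PATH.
# (Hypothetical helper, not part of AIChat.)
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Append the directory for this session only when it is missing.
if ! path_contains "$install_dir"; then
  PATH="$PATH:$install_dir"
  export PATH
fi
```

To make the change permanent, the same lines can go in your shell's startup file.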

Features

Multi-Providers

Integrate seamlessly with over 20 leading LLM providers through a unified interface. Supported providers include OpenAI, Claude, Gemini (Google AI Studio), Ollama, Groq, Azure-OpenAI, VertexAI, Bedrock, Github Models, Mistral, Deepseek, AI21, XAI Grok, Cohere, Perplexity, Cloudflare, OpenRouter, Ernie, Qianwen, Moonshot, ZhipuAI, Lingyiwanwu, MiniMax, Deepinfra, VoyageAI, and any OpenAI-compatible API provider.

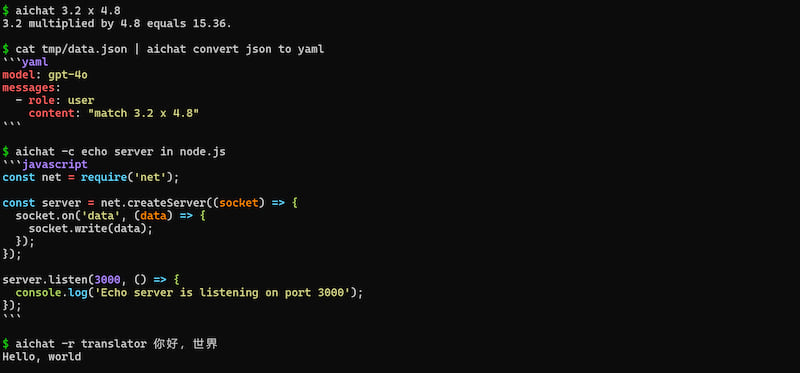

CMD Mode

Run one-off prompts directly from the command line with AIChat's CMD mode.

REPL Mode

Experience an interactive Chat-REPL with features like tab autocompletion, multi-line input support, history search, configurable keybindings, and custom REPL prompts.

Shell Assistant

Elevate your command-line efficiency. Describe your tasks in natural language, and let AIChat transform them into precise shell commands. AIChat intelligently adjusts to your OS and shell environment.

Multi-Form Input

Accept diverse input forms such as stdin, local files and directories, and remote URLs, allowing flexibility in data handling.

| Input | CMD | REPL |
|---|---|---|
| CMD | `aichat hello` | |
| STDIN | `cat data.txt \| aichat` | |
| Last Reply | | `.file %%` |
| Local files | `aichat -f image.png -f data.txt` | `.file image.png data.txt` |
| Local directories | `aichat -f dir/` | `.file dir/` |
| Remote URLs | `aichat -f https://example.com` | `.file https://example.com` |
| External commands | ``aichat -f '`git diff`'`` | `` .file `git diff` `` |
| Combine Inputs | `aichat -f dir/ -f data.txt explain` | `.file dir/ data.txt -- explain` |
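When the list of inputs is built dynamically, repeating `-f` by hand gets tedious. The sketch below wraps the "Combine Inputs" form from the table in a small POSIX shell function. The name `ask_files` is hypothetical and not part of AIChat; the only AIChat syntax it relies on is the `-f <input>` flag followed by the prompt text, as shown in the table.

```shell
# ask_files PROMPT INPUT...: run aichat with one "-f" flag per input.
# ask_files is a hypothetical wrapper, not part of AIChat.
ask_files() {
  prompt=$1
  shift
  n=$#
  # Append "-f <input>" for each original argument, then drop the originals.
  for input in "$@"; do
    set -- "$@" -f "$input"
  done
  shift "$n"
  aichat "$@" "$prompt"
}
```

With this definition, `ask_files explain dir/ data.txt` runs `aichat -f dir/ -f data.txt explain`.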

Role

Customize roles to tailor LLM behavior, enhancing interaction efficiency and boosting productivity.

The role consists of a prompt and model configuration.

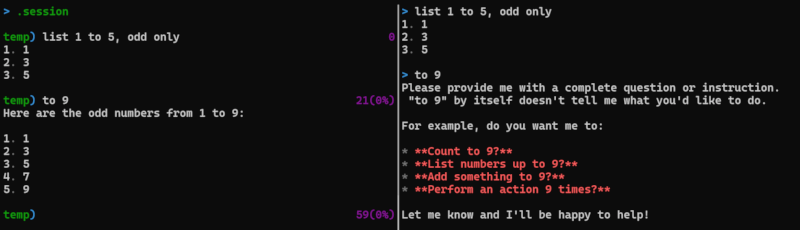

Session

Maintain context-aware conversations through sessions, ensuring continuity in interactions.

The left side uses a session, while the right side does not use a session.

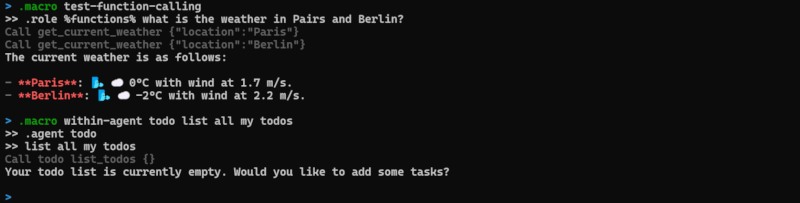

Macro

Streamline repetitive tasks by combining a series of REPL commands into a custom macro.

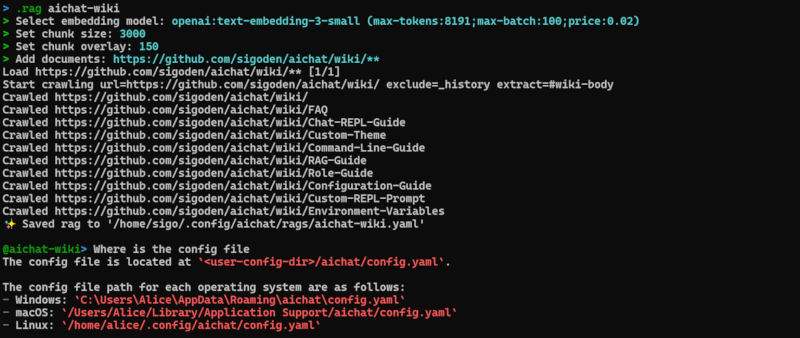

RAG

Integrate external documents into your LLM conversations for more accurate and contextually relevant responses.

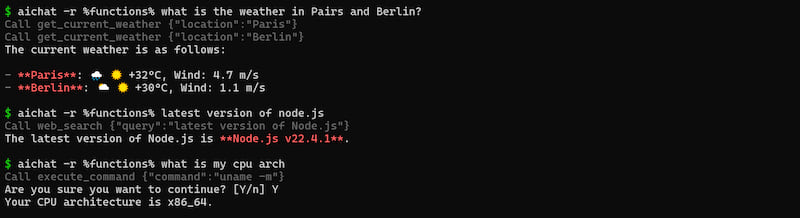

Function Calling

Function calling supercharges LLMs by connecting them to external tools and data sources. This unlocks a world of possibilities, enabling LLMs to go beyond their core capabilities and tackle a wider range of tasks.

The llm-functions repository (https://github.com/sigoden/llm-functions) helps you make the most of this feature.

AI Tools

Integrate external tools to automate tasks, retrieve information, and perform actions directly within your workflow.

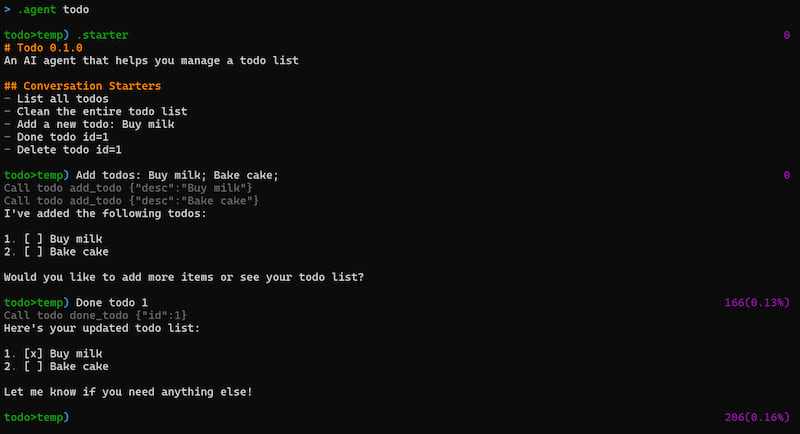

AI Agents (CLI version of OpenAI GPTs)

AI Agent = Instructions (Prompt) + Tools (Function Calling) + Documents (RAG).

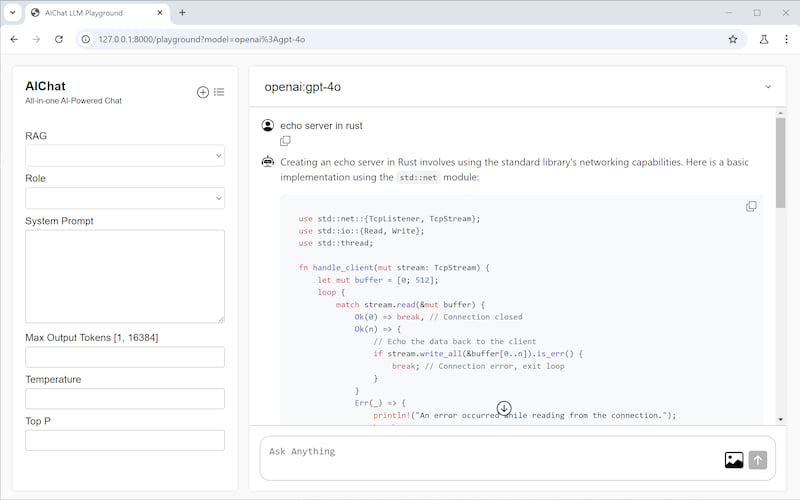

Local Server Capabilities

AIChat includes a lightweight built-in HTTP server for easy deployment.

    $ aichat --serve
    Chat Completions API: http://127.0.0.1:8000/v1/chat/completions
    Embeddings API:       http://127.0.0.1:8000/v1/embeddings
    Rerank API:           http://127.0.0.1:8000/v1/rerank
    LLM Playground:       http://127.0.0.1:8000/playground
    LLM Arena:            http://127.0.0.1:8000/arena?num=2

Proxy LLM APIs

The server proxies chat requests to the configured providers through a single chat completions endpoint. The `model` field takes the form `provider:model`.

Test with curl:

    curl -X POST -H "Content-Type: application/json" -d '{
      "model":"claude:claude-3-5-sonnet-20240620",
      "messages":[{"role":"user","content":"hello"}],
      "stream":true
    }' http://127.0.0.1:8000/v1/chat/completions
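For scripting, it can help to build the request body from variables rather than editing JSON by hand. The following is a rough sketch, not an official client: `json_escape`, `chat_payload`, and `chat` are hypothetical helper names, the server is assumed to be running at the address printed by `aichat --serve`, and the endpoint is assumed to accept `"stream":false` the way OpenAI-style APIs usually do.

```shell
# json_escape STRING: escape backslashes and double quotes for use inside
# a JSON string. Minimal sketch: newlines and control characters are not handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# chat_payload MODEL CONTENT: print a single-message request body.
# "stream":false is an assumption; the curl example above uses "stream":true.
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# chat MODEL CONTENT: POST the body to a locally running `aichat --serve`.
# curl reads the request body from stdin via "-d @-".
chat() {
  chat_payload "$1" "$2" |
    curl -sS -X POST -H "Content-Type: application/json" -d @- \
      http://127.0.0.1:8000/v1/chat/completions
}
```

For example, `chat "claude:claude-3-5-sonnet-20240620" "hello"` sends the same message as the curl command above, without streaming.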

LLM Playground

A web application to interact with supported LLMs directly from your browser.

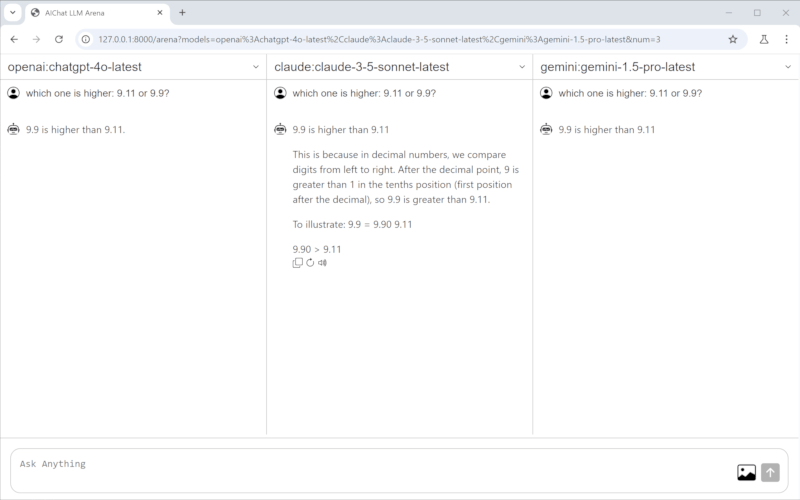

LLM Arena

A web platform to compare different LLMs side-by-side.

Custom Themes

AIChat supports custom dark and light themes, which highlight response text and code blocks.

Documentation

- Chat-REPL Guide

- Command-Line Guide

- Role Guide

- Macro Guide

- RAG Guide

- Environment Variables

- Configuration Guide

- Custom Theme

- Custom REPL Prompt

- FAQ

License

Copyright (c) 2023-2025 aichat-developers.

AIChat is made available under the terms of either the MIT License or the Apache License 2.0, at your option.

See the LICENSE-APACHE and LICENSE-MIT files for license details.

Dependencies

~40–78MB

~1.5M SLoC