33 releases (stable)

| Version | Released |
|---|---|
| 2.6.2 | Nov 23, 2024 |
| 2.5.4 | Oct 25, 2024 |
| 1.1.0 | Aug 28, 2024 |
| 0.3.0 | Aug 24, 2024 |
| 0.1.5 | Jul 3, 2024 |

#51 in Machine learning

Used in 8 crates (2 directly)

400KB, 8K SLoC

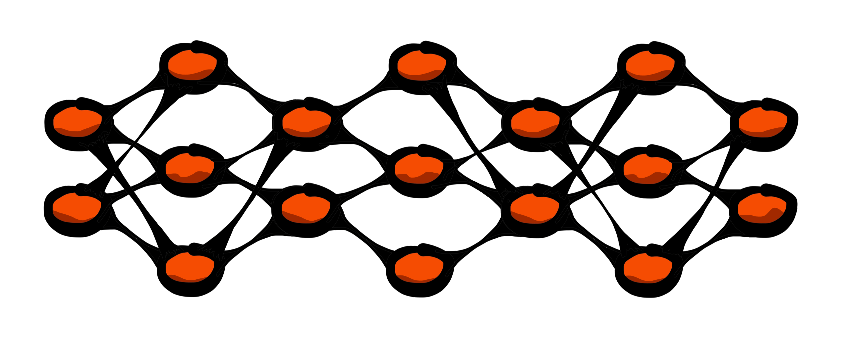

neurons is a neural network library written from scratch in Rust. It provides a flexible and efficient way to build, train, and evaluate neural networks. The library is designed to be modular, allowing for easy customization of network architectures, activation functions, objective functions, and optimization techniques.


Features

Modular design

- Ready-to-use dense, convolutional and maxpool layers.

- Inferred input shapes when adding layers.

- Easily specify activation functions, biases, and dropout.

- Customizable objective functions and optimization techniques.

- Feedback loops and feedback blocks for more advanced architectures.

- Skip connections with simple accumulation specification.

- Much more!

Fast

- Leveraging Rust's performance and parallelization capabilities.

Everything built from scratch

- The only dependencies are `rayon` and `plotters`, where `plotters` is only used by some of the examples (and is thus optional).

Various examples showcasing the capabilities

- Located in the `examples/` directory, with subdirectories for various tasks showcasing the different architectures and techniques.

The package

The package is divided into separate modules, each containing different parts of the library, everything being connected through the network.rs module.

Core

tensor.rs

Describes the custom tensor struct and its operations.

A tensor is divided into four main types:

- `Single`: One-dimensional data (`Vec<_>`).

- `Double`: Two-dimensional data (`Vec<Vec<_>>`).

- `Triple`: Three-dimensional data (`Vec<Vec<Vec<_>>>`).

- `Quadruple`: Four-dimensional data (`Vec<Vec<Vec<Vec<_>>>>`).

And further into two additional helper types:

- `Quintuple`: Five-dimensional data (`Vec<Vec<Vec<Vec<Vec<(usize, usize)>>>>>`). Used to hold maxpool indices.

- `Nested`: A nested tensor (`Vec<Tensor>`). Used through feedback blocks.

Each shape follows the same pattern of operations, but with increasing dimensions.

Thus, every tensor contains information about its shape and data.
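
One way to picture a tensor that carries both its shape and its data is sketched below. This is a minimal illustration rather than the library's definition: the `Shape`, `Data`, and `Tensor` types and their methods are assumptions made for this example, and only three of the variants are shown.

```rust
/// Hypothetical shape descriptor; mirrors the variant names used above.
#[derive(Debug, Clone, PartialEq)]
pub enum Shape {
    Single(usize),
    Double(usize, usize),
    Triple(usize, usize, usize),
}

/// Hypothetical data container with one variant per dimensionality.
#[derive(Debug, Clone, PartialEq)]
pub enum Data {
    Single(Vec<f32>),
    Double(Vec<Vec<f32>>),
    Triple(Vec<Vec<Vec<f32>>>),
}

/// A tensor bundles its data with the matching shape.
#[derive(Debug, Clone, PartialEq)]
pub struct Tensor {
    pub shape: Shape,
    pub data: Data,
}

impl Tensor {
    /// Builds a one-dimensional tensor; the shape is derived from the data.
    pub fn single(data: Vec<f32>) -> Self {
        Tensor { shape: Shape::Single(data.len()), data: Data::Single(data) }
    }

    /// Builds a three-dimensional tensor filled with zeros.
    pub fn zeros_triple(channels: usize, rows: usize, columns: usize) -> Self {
        Tensor {
            shape: Shape::Triple(channels, rows, columns),
            data: Data::Triple(vec![vec![vec![0.0; columns]; rows]; channels]),
        }
    }

    /// Total number of elements, computed from the shape alone.
    pub fn len(&self) -> usize {
        match self.shape {
            Shape::Single(n) => n,
            Shape::Double(r, c) => r * c,
            Shape::Triple(ch, r, c) => ch * r * c,
        }
    }
}
```

Because the shape is stored next to the data, a layer can match on the variant it receives and decide at run time how to treat the input.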

The reason for wrapping the data in this way is to easily allow for dynamic shapes and types in the network.

random.rs

Functionality for random number generation.

Used when initializing the weights of the network.

network.rs

Describes the network struct and its operations.

The network contains a vector of layers, an optimizer, and an objective function.

The network is built layer by layer, and then trained using the `learn` function.

See quickstart or the `examples/` directory for more information.

Layers

dense.rs

Describes the dense layer and its operations.

convolution.rs

Describes the convolutional layer and its operations.

If the input is a tensor of shape `Single`, the layer will automatically reshape it into a `Triple` tensor.

deconvolution.rs

Describes the deconvolutional layer and its operations.

If the input is a tensor of shape `Single`, the layer will automatically reshape it into a `Triple` tensor.

maxpool.rs

Describes the maxpool layer and its operations.

If the input is a tensor of shape `Single`, the layer will automatically reshape it into a `Triple` tensor.

feedback.rs

Describes the feedback block and its operations.

Functions

activation.rs

Contains all the possible activation functions to be used.
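
For readers unfamiliar with these functions, three of them are sketched below on plain slices. The free functions `relu`, `sigmoid`, and `softmax` are assumptions for illustration; the library exposes its activations through `activation::Activation` and may implement them differently.

```rust
/// ReLU: max(0, x), applied element-wise.
pub fn relu(input: &[f32]) -> Vec<f32> {
    input.iter().map(|&x| x.max(0.0)).collect()
}

/// Sigmoid: 1 / (1 + e^(-x)), applied element-wise.
pub fn sigmoid(input: &[f32]) -> Vec<f32> {
    input.iter().map(|&x| 1.0 / (1.0 + (-x).exp())).collect()
}

/// Softmax over the whole slice.
/// The maximum is subtracted first for numerical stability.
pub fn softmax(input: &[f32]) -> Vec<f32> {
    let max = input.iter().cloned().fold(f32::NEG_INFINITY, f32::max);
    let exps: Vec<f32> = input.iter().map(|&x| (x - max).exp()).collect();
    let sum: f32 = exps.iter().sum();
    exps.iter().map(|&e| e / sum).collect()
}
```

Softmax is the usual choice for the final layer of a classifier, since its outputs are non-negative and sum to one.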

objective.rs

Contains all the possible objective functions to be used.
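
As an example of what an objective function computes, the sketch below evaluates the mean squared error together with its gradient, and optionally clamps each gradient component to a range. The function `mse` and its signature are assumptions for illustration; the optional `(min, max)` pair only mirrors the gradient clipping argument shown in the quickstart.

```rust
/// Mean squared error and its gradient with respect to the prediction.
/// If `clip` is given as (min, max), each gradient component is clamped to that range.
pub fn mse(prediction: &[f32], target: &[f32], clip: Option<(f32, f32)>) -> (f32, Vec<f32>) {
    assert_eq!(prediction.len(), target.len(), "lengths must match");
    let n = prediction.len() as f32;

    // Loss: mean of the squared differences.
    let loss = prediction
        .iter()
        .zip(target)
        .map(|(p, t)| (p - t).powi(2))
        .sum::<f32>()
        / n;

    // Gradient: d(loss)/d(p_i) = 2 * (p_i - t_i) / n, optionally clipped.
    let gradient = prediction
        .iter()
        .zip(target)
        .map(|(p, t)| {
            let g = 2.0 * (p - t) / n;
            match clip {
                Some((lo, hi)) => g.clamp(lo, hi),
                None => g,
            }
        })
        .collect();

    (loss, gradient)
}
```

Clipping bounds the size of a single update, which can help when a few samples produce very large errors.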

optimizer.rs

Contains all the possible optimization techniques to be used.

Examples

plot.rs

Contains the plotting functionality for the examples.

Quickstart

    use neurons::{activation, network, objective, optimizer, tensor};

    fn main() {
        // New feedforward network with input shape (1, 28, 28)
        let mut network = network::Network::new(tensor::Shape::Triple(1, 28, 28));

        // Convolution(filters, kernel, stride, padding, dilation, activation, Some(dropout))
        network.convolution(5, (3, 3), (1, 1), (1, 1), (1, 1), activation::Activation::ReLU, None);

        // Maxpool(kernel, stride)
        network.maxpool((2, 2), (2, 2));

        // Dense(outputs, activation, bias, Some(dropout))
        network.dense(100, activation::Activation::ReLU, false, None);

        // Dense(outputs, activation, bias, Some(dropout))
        network.dense(10, activation::Activation::Softmax, false, None);

        network.set_optimizer(optimizer::RMSprop::create(
            0.001,      // Learning rate
            0.0,        // Alpha
            1e-8,       // Epsilon
            Some(0.01), // Decay
            Some(0.01), // Momentum
            true,       // Centered
        ));

        network.set_objective(
            objective::Objective::MSE, // Objective function
            Some((-1f32, 1f32))        // Gradient clipping
        );

        println!("{}", network); // Display the network

        let (x, y) = { }; // Add your data here

        let validation = (
            x_val, // Validation data
            y_val, // Validation labels
            5      // Stop if val loss has not improved for 5 epochs
        );

        let batch = 32;       // Minibatch size
        let epochs = 100;     // Number of epochs
        let print = Some(10); // Print every 10th epoch

        let (train_loss, val_loss, val_acc) = network.learn(x, y, validation, batch, epochs, print);
    }
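
To see how the shapes in the quickstart propagate from layer to layer, the sketch below applies the commonly used output-size formula for convolution and pooling windows. It assumes the library follows that formula; `output_size` and `quickstart_shapes` are hypothetical helpers and not part of the crate.

```rust
/// Output size along one spatial axis of a convolution or pooling window,
/// using the common formula floor((n + 2p - d(k - 1) - 1) / s) + 1.
/// Assumes kernel >= 1, stride >= 1, and n + 2p >= d(k - 1) + 1.
pub fn output_size(input: usize, kernel: usize, stride: usize, padding: usize, dilation: usize) -> usize {
    (input + 2 * padding - dilation * (kernel - 1) - 1) / stride + 1
}

/// Shapes (channels, height, width) after the convolution and maxpool layers of the
/// quickstart, followed by the flattened length fed to the first dense layer.
pub fn quickstart_shapes() -> ((usize, usize, usize), (usize, usize, usize), usize) {
    let (height, width) = (28usize, 28usize);

    // convolution(5, (3, 3), (1, 1), (1, 1), (1, 1), ...): 5 filters, padding 1 keeps 28x28.
    let conv = (5, output_size(height, 3, 1, 1, 1), output_size(width, 3, 1, 1, 1));

    // maxpool((2, 2), (2, 2)): no padding, no dilation, halves each spatial axis.
    let pool = (conv.0, output_size(conv.1, 2, 2, 0, 1), output_size(conv.2, 2, 2, 0, 1));

    (conv, pool, pool.0 * pool.1 * pool.2)
}
```

Under these assumptions the convolution yields (5, 28, 28), the maxpool yields (5, 14, 14), and the first dense layer receives 980 inputs.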

Releases

v2.6.2 – Fixed-size vectors and reduced redundant cloning.

Use fixed-size vectors where possible to increase performance.

Modify tensor operations to both utilise parallelisation and reduce redundant cloning.

Reduce general redundant cloning in the network etc.

Benchmarking benches/benchmark.rs (mnist version):

- v2.6.2: 19.826974433s (1.92x speedup from v2.6.1)

- v2.6.1: 38.140101795s (2.31x slowdown from v2.0.1)

- v2.0.1: 16.504570304s

Note: v2.0.1 is massively outdated wrt. modularity etc., reflected through benchmarking.

v2.6.1 – Bugs and visualisation.

- Fix bugs concerning expanded feedback approach 1.

- Improved results visualisation.

v2.6.0 – Feedback approach 1.

- Improved implementation of approach 1 feedback connections.

- Add possibility of selecting iteration count.

- Improve averaging; now takes a vector of tensors.

v2.5.5 – Comparison and timing.

- Added timing functionality.

- Improve comparison functionality.

v2.5.4 – Bugs and examples.

- Fix a typo in the `Adam` and `AdamW` optimizers.

- Updated examples.

- Updated comparisons.

v2.5.3 – Architecture comparison.

Added examples comparing the performance of different architectures.

Probes the final network by turning off skips and feedbacks, etc.

examples/compare/*

Corresponding plotting functionality.

documentation/comparison.py

v2.5.2 – Bug extermination and expanded examples.

- Fix bug related to skip connections.

- Fix bug related to validation forward pass.

- Expanded examples.

- Improve feedback block.

v2.5.1 – Convolution dilation.

Add dilation to the convolution layer.

v2.5.0 – Deconvolution layer.

Initial implementation of the deconvolution layer.

Created with the help of GitHub Copilot.

Validated against the corresponding PyTorch implementation; see documentation/validation/deconvolution.py
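
The core idea of a deconvolution (transposed convolution) can be shown in one dimension: every input element scatters a scaled copy of the kernel into the output. The sketch below is a simplified illustration without padding or dilation; `deconvolution_1d` is a hypothetical function, whereas the library's layer works on multi-channel, two-dimensional data.

```rust
/// Naive one-dimensional transposed convolution (no padding, no dilation).
/// Each input element scatters a scaled copy of the kernel into the output.
pub fn deconvolution_1d(input: &[f32], kernel: &[f32], stride: usize) -> Vec<f32> {
    if input.is_empty() || kernel.is_empty() {
        return Vec::new();
    }

    // Output length: (n - 1) * stride + k.
    let length = (input.len() - 1) * stride + kernel.len();
    let mut output = vec![0.0; length];

    for (i, &x) in input.iter().enumerate() {
        for (k, &w) in kernel.iter().enumerate() {
            // Overlapping contributions are summed.
            output[i * stride + k] += x * w;
        }
    }
    output
}
```

With a stride of 2, an input of length 2 and a kernel of length 3 produce an output of length 5, where the middle element receives contributions from both inputs.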

v2.4.1 – Bug-fixes.

Minor bug-fixes and example expansion.

v2.4.0 – Feedback blocks.

Thorough expansion of the feedback module.

Feedback blocks automatically handle weight coupling and skip connections.

When defining a feedback block in the network's layers, the following syntax is used:

    network.feedback(
        vec![feedback::Layer::Convolution(
            1,
            activation::Activation::ReLU,
            (3, 3),
            (1, 1),
            (1, 1),
            None,
        )],
        2,
        true,
        feedback::Accumulation::Mean,
    );

v2.3.0 – Skip connection.

Add possibility of skip connections.

Limitations:

- Only works between equal shapes.

- Backward pass assumes an identity mapping (gradients are simply added).
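
The two limitations above can be made concrete with a small sketch on flat vectors. The functions `skip_forward` and `skip_backward` are hypothetical and only illustrate the arithmetic of an identity skip; they are not the library's API.

```rust
/// Forward pass: the skip adds the block input to the block output.
/// Both must have the same shape, here simply the same length.
pub fn skip_forward(input: &[f32], block_output: &[f32]) -> Vec<f32> {
    assert_eq!(input.len(), block_output.len(), "skip requires equal shapes");
    input.iter().zip(block_output).map(|(x, y)| x + y).collect()
}

/// Backward pass: with an identity mapping on the skip path, the gradient reaching
/// the input is the gradient through the block plus the unchanged upstream gradient.
pub fn skip_backward(gradient_through_block: &[f32], upstream_gradient: &[f32]) -> Vec<f32> {
    assert_eq!(gradient_through_block.len(), upstream_gradient.len());
    gradient_through_block
        .iter()
        .zip(upstream_gradient)
        .map(|(a, b)| a + b)
        .collect()
}
```

Because the skip path has no weights of its own, it adds no parameters and gives the gradient a direct route to earlier layers.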

v2.2.0 – Selectable scaling wrt. loopbacks.

Add possibility of selecting the scaling function.

- `tensor::Scale`

- `feedback::Accumulation`

See implementations of the above for more information.

v2.1.0 – Maxpool tensor consistency.

Update maxpool logic to ensure consistency wrt. other layers.

Maxpool layers now return a tensor::Tensor (of shape tensor::Shape::Quintuple), instead of nested Vecs.

This will lead to consistency when implementing maxpool for feedback blocks.
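
To illustrate why a maxpool layer keeps indices alongside its output, the sketch below pools a single channel and records where each maximum came from. `maxpool_2d` is a hypothetical function on nested `Vec`s; the library wraps the equivalent information for all channels and samples in a `tensor::Tensor`.

```rust
/// 2D max pooling over one channel (assumes a rectangular input).
/// Returns the pooled values together with the (row, column) index of each maximum,
/// which a backward pass can use to route gradients to the winning positions.
pub fn maxpool_2d(
    input: &[Vec<f32>],
    kernel: (usize, usize),
    stride: (usize, usize),
) -> (Vec<Vec<f32>>, Vec<Vec<(usize, usize)>>) {
    assert!(kernel.0 > 0 && kernel.1 > 0 && stride.0 > 0 && stride.1 > 0);
    let rows = input.len();
    let columns = if rows > 0 { input[0].len() } else { 0 };

    let mut values = Vec::new();
    let mut indices = Vec::new();
    let mut r = 0;
    while r + kernel.0 <= rows {
        let (mut value_row, mut index_row) = (Vec::new(), Vec::new());
        let mut c = 0;
        while c + kernel.1 <= columns {
            // Scan the current window for its maximum and remember its position.
            let mut best = (f32::NEG_INFINITY, (r, c));
            for i in r..r + kernel.0 {
                for j in c..c + kernel.1 {
                    if input[i][j] > best.0 {
                        best = (input[i][j], (i, j));
                    }
                }
            }
            value_row.push(best.0);
            index_row.push(best.1);
            c += stride.1;
        }
        values.push(value_row);
        indices.push(index_row);
        r += stride.0;
    }
    (values, indices)
}
```

During backpropagation, only the positions stored in the index structure receive a gradient; all other inputs of a window get zero.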

v2.0.5 – Bug fixes and renaming.

Minor bug fixes to feedback connections.

Rename simple feedback connections to loopback connections for consistency.

v2.0.4 – Initial feedback block structure.

Add skeleton for feedback block structure. Missing correct handling of backward pass.

How should the optimizer be handled (wrt. buffer, etc.)?

v2.0.3 – Improved optimizer creation.

Before:

    network.set_optimizer(
        optimizer::Optimizer::AdamW(
            optimizer::AdamW {
                learning_rate: 0.001,
                beta1: 0.9,
                beta2: 0.999,
                epsilon: 1e-8,
                decay: 0.01,

                // To be filled by the network:
                momentum: vec![],
                velocity: vec![],
            }
        )
    );

Now:

    network.set_optimizer(optimizer::RMSprop::create(
        0.001,      // Learning rate
        0.0,        // Alpha
        1e-8,       // Epsilon
        Some(0.01), // Decay
        Some(0.01), // Momentum
        true,       // Centered
    ));

v2.0.2 – Improved compatibility of differing layers.

Layers now automatically reshape input tensors to the correct shape. I.e., your network could be conv->dense->conv etc. Earlier versions only allowed conv/maxpool->dense connections.

Note: While this is now possible, some testing proved this to be suboptimal in terms of performance.
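
The reshaping needed between convolutional and dense layers amounts to flattening and un-flattening the data. The sketch below shows one possible layout (channel by channel, row by row); `flatten` and `reshape` are hypothetical helpers, and the ordering used by the library is an assumption here.

```rust
/// Flattens (channels, rows, columns) data into a single vector,
/// channel by channel and row by row.
pub fn flatten(input: &[Vec<Vec<f32>>]) -> Vec<f32> {
    input.iter().flatten().flatten().copied().collect()
}

/// Reshapes a flat vector into (channels, rows, columns).
/// Returns None if the length does not match the requested shape.
pub fn reshape(
    input: &[f32],
    channels: usize,
    rows: usize,
    columns: usize,
) -> Option<Vec<Vec<Vec<f32>>>> {
    if input.len() != channels * rows * columns || rows * columns == 0 {
        return None;
    }
    Some(
        input
            .chunks(rows * columns)
            .map(|channel| channel.chunks(columns).map(|row| row.to_vec()).collect())
            .collect(),
    )
}
```

As long as both directions use the same ordering, flattening followed by reshaping returns the original data.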

v2.0.1 – Optimized optimizer step.

Combines operations into a single loop instead of repeatedly iterating over the `tensor::Tensor`s.
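
The idea of a single-pass optimizer step can be sketched with AdamW on a flat weight vector: the moment updates, bias correction, and weight update all happen inside one loop. The struct below reuses the field names from the v2.0.3 example further up, but the `step` method and its signature are assumptions, not the library's implementation.

```rust
/// Hypothetical AdamW state for one flat parameter vector.
pub struct AdamW {
    pub learning_rate: f32,
    pub beta1: f32,
    pub beta2: f32,
    pub epsilon: f32,
    pub decay: f32,
    pub momentum: Vec<f32>,
    pub velocity: Vec<f32>,
}

impl AdamW {
    /// Applies one update for time step `t` (starting at 1) in a single pass.
    pub fn step(&mut self, t: i32, weights: &mut [f32], gradients: &[f32]) {
        let (lr, b1, b2) = (self.learning_rate, self.beta1, self.beta2);
        let (epsilon, decay) = (self.epsilon, self.decay);
        let correction1 = 1.0 - b1.powi(t);
        let correction2 = 1.0 - b2.powi(t);

        for (((w, &g), m), v) in weights
            .iter_mut()
            .zip(gradients)
            .zip(self.momentum.iter_mut())
            .zip(self.velocity.iter_mut())
        {
            // First and second moment estimates.
            *m = b1 * *m + (1.0 - b1) * g;
            *v = b2 * *v + (1.0 - b2) * g * g;

            // Bias-corrected estimates.
            let m_hat = *m / correction1;
            let v_hat = *v / correction2;

            // Decoupled weight decay acts on the weight directly, not on the gradient.
            *w -= lr * (m_hat / (v_hat.sqrt() + epsilon) + decay * *w);
        }
    }
}
```

Each weight, gradient, and moment entry is touched once per step, which avoids building intermediate vectors for the separate operations.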

Benchmarking benches/benchmark.rs (mnist version):

- v2.0.1: 16.504570304s (1.05x speedup)

- v2.0.0: 17.268632412s

v2.0.0 – Fix batched weight updates.

Weight updates are now batched correctly.

See network::Network::learn for details.

Benchmarking examples/example_benchmark.rs (mnist version):

- batched (128): 17.268632412s (4.83x speedup)

- unbatched (1): 83.347593292s

v1.1.0 – Improved optimizer step.

Optimizer step more intuitive and easy to read.

Using tensor::Tensor instead of manually handling vectors.

v1.0.0 – Fully working integrated network.

Network of convolutional and dense layers works.

v0.3.0 – Batched training; parallelization.

Batched training (network::Network::learn).

Parallelization of batches (rayon).
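
The combination of batching and parallelization can be sketched as follows: per-sample gradients are computed on several threads, then averaged into one gradient per batch. To stay dependency-free, this sketch uses `std::thread::scope` from the standard library instead of `rayon`, and a toy one-weight model; `sample_gradient` and `batch_gradient` are hypothetical names.

```rust
use std::thread;

/// Gradient of 0.5 * (w * x - y)^2 with respect to w for one sample.
fn sample_gradient(weight: f32, x: f32, y: f32) -> f32 {
    (weight * x - y) * x
}

/// Averages per-sample gradients over a batch of (x, y) pairs,
/// splitting the work across at most `workers` threads.
pub fn batch_gradient(weight: f32, batch: &[(f32, f32)], workers: usize) -> f32 {
    if batch.is_empty() {
        return 0.0;
    }
    let workers = workers.max(1);
    // Ceiling division so that every sample lands in exactly one chunk.
    let chunk = ((batch.len() + workers - 1) / workers).max(1);

    let total: f32 = thread::scope(|scope| {
        // Spawn all workers first, then join them.
        let handles: Vec<_> = batch
            .chunks(chunk)
            .map(|samples| {
                scope.spawn(move || {
                    samples
                        .iter()
                        .map(|&(x, y)| sample_gradient(weight, x, y))
                        .sum::<f32>()
                })
            })
            .collect();
        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker panicked"))
            .sum::<f32>()
    });

    total / batch.len() as f32
}
```

The averaged gradient is then applied once per batch, for example as `weight -= learning_rate * batch_gradient(weight, &batch, 4)`.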

Benchmarking examples/example_benchmark.rs (iris version):

- v0.3.0: 0.318811179s (6.96x speedup)

- v0.2.2: 2.218362758s

v0.2.2 – Convolution.

Convolutional layer. Improved documentation.

v0.2.0 – Feedback.

Initial feedback connection implementation.

v0.1.5 – Improved documentation.

Improved documentation.

v0.1.1 – Custom tensor struct.

Custom tensor struct. Unit tests.

v0.1.0 – Dense.

Dense feedforward network. Activation functions. Objective functions. Optimization techniques.

Progress

Layer types

- Dense
- Convolution
  - Forward pass
    - Padding
    - Stride
    - Dilation
  - Backward pass
    - Padding
    - Stride
    - Dilation
- Deconvolution (#22)
  - Forward pass
    - Padding
    - Stride
    - Dilation
  - Backward pass
    - Padding
    - Stride
    - Dilation
- Max pooling
- Feedback

Activation functions

- Linear

- Sigmoid

- Tanh

- ReLU

- LeakyReLU

- Softmax

Objective functions

- AE

- MAE

- MSE

- RMSE

- CrossEntropy

- BinaryCrossEntropy

- KLDivergence

Optimization techniques

- SGD

- SGDM

- Adam

- AdamW

- RMSprop

- Minibatch

Architecture

- Feedforward (dubbed `Network`)

- Feedback loops

- Skip connections

- Feedback blocks

- Recurrent

Feedback

- Feedback connection

- Selectable gradient scaling

- Selectable gradient accumulation

- Feedback block

- Selectable weight coupling

Regularization

- Dropout

- Early stopping

- Batch normalization

Parallelization

- Parallelization of batches

- Other parallelization?

- NOTE: Slowdown when parallelizing everything (commit: 1f94cea56630a46d40755af5da20714bc0357146).

Testing

- Unit tests

- Thorough testing of activation functions

- Thorough testing of objective functions

- Thorough testing of optimization techniques

- Thorough testing of feedback blocks

- Integration tests

- Network forward pass

- Network backward pass

- Network training (i.e., weight updates)

Examples

- XOR
- Iris
- FTIR
  - MLP
    - Plain
    - Skip
    - Looping
    - Feedback
  - CNN
    - Plain
    - Skip
    - Looping
    - Feedback
- MNIST
  - CNN
  - CNN + Skip
  - CNN + Looping
  - CNN + Feedback
- Fashion-MNIST
  - CNN
  - CNN + Skip
  - CNN + Looping
  - CNN + Feedback
- CIFAR-10
  - CNN
  - CNN + Skip
  - CNN + Looping
  - CNN + Feedback

Other

- Documentation

- Custom random weight initialization

- Custom tensor type

- Plotting

- Data from file

- General data loading functionality

- Custom icon/image for documentation

- Custom stylesheet for documentation

- Add number of parameters when displaying `Network`

- Network type specification (e.g. f32, f64)

- Serialisation (saving and loading)

- Single layer weights

- Entire network weights

- Custom (binary) file format, with header explaining contents

- Logging

Resources

Sources

- backpropagation

- softmax

- momentum

- Adam

- AdamW

- RMSprop

- convolution 1

- convolution 2

- convolution 3

- skip connection

- feedback 1

- feedback 2

- transposed convolution

Tools used

Profiling

To profile, install flamegraph:

    cargo install flamegraph

and include

    [profile.release]
    debug = true

in the Cargo.toml file.

Then run the following command:

    sudo cargo flamegraph --example {{ EXAMPLE }} --release

with {{ EXAMPLE }} being the name of the example you want to profile (e.g., benchmark).

Inspiration

Dependencies: ~5.5–7.5MB, ~134K SLoC