5 releases

| Version | Date |
|---|---|
| 0.1.4 | Feb 15, 2024 |
| 0.1.3 | Feb 2, 2024 |
| 0.1.2 | Feb 2, 2024 |
| 0.1.1 | Jan 7, 2024 |
| 0.1.0 | Jan 2, 2024 |

#1472 in Database interfaces

120KB

2.5K SLoC

roster

Replacement of Redis with Rust & io-uring

roster is an in-memory data store that aims to provide a fully Redis-compatible API.

It is more of an experiment right now, so feel free to contribute. Some of the initial code involving the RESP protocol comes from mini-redis.

The current work is to lay a good foundation on which the Redis protocol can be built.

Benchmarks

Benchmark results are available here: benchmarks.

The Redis APIs are not fully implemented and only some basic commands are present, so these benchmarks only serve to check that the decisions made for the storage and the I/O are sound.

Those will be updated as implementations progress.

Benchmarks are made between Redis, Dragonfly & Roster.

ENT1-M Scaleway

The first benchmarks run on a Scaleway ENT1-M, a decent but not especially large instance. Results are heavily limited by the instance itself and by the network between our two instances; the same limits apply to Redis and Dragonfly.

- 16 vCPUs

- RAM: 64G

- BW: 3.2 Gbps

Protocol

Redis

- Only RESP3 is targeted for now.

The full list of implemented commands can be checked here.
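To make the protocol target concrete, here is a minimal sketch of how a handful of RESP3 values are laid out on the wire. The `Frame` type and its methods are hypothetical names chosen for illustration; they are not roster's actual types, and only a small subset of RESP3 is covered.

```rust
/// A small subset of RESP3 values.
/// Hypothetical type for illustration, not roster's own frame type.
#[derive(Debug, Clone, PartialEq)]
pub enum Frame {
    SimpleString(String),
    BlobString(Vec<u8>),
    Number(i64),
    Boolean(bool),
    Null,
    Array(Vec<Frame>),
}

impl Frame {
    /// Append the RESP3 wire encoding of `self` to `out`.
    pub fn encode(&self, out: &mut Vec<u8>) {
        match self {
            // +<text>\r\n
            Frame::SimpleString(s) => {
                out.push(b'+');
                out.extend_from_slice(s.as_bytes());
                out.extend_from_slice(b"\r\n");
            }
            // $<length>\r\n<bytes>\r\n
            Frame::BlobString(bytes) => {
                out.extend_from_slice(format!("${}\r\n", bytes.len()).as_bytes());
                out.extend_from_slice(bytes);
                out.extend_from_slice(b"\r\n");
            }
            // :<integer>\r\n
            Frame::Number(n) => out.extend_from_slice(format!(":{}\r\n", n).as_bytes()),
            // #t\r\n or #f\r\n (new in RESP3)
            Frame::Boolean(b) => out.extend_from_slice(if *b { b"#t\r\n" } else { b"#f\r\n" }),
            // _\r\n (new in RESP3)
            Frame::Null => out.extend_from_slice(b"_\r\n"),
            // *<count>\r\n followed by each element
            Frame::Array(items) => {
                out.extend_from_slice(format!("*{}\r\n", items.len()).as_bytes());
                for item in items {
                    item.encode(out);
                }
            }
        }
    }

    /// Convenience wrapper returning a fresh buffer.
    pub fn to_bytes(&self) -> Vec<u8> {
        let mut out = Vec::new();
        self.encode(&mut out);
        out
    }
}
```

A client command such as `GET key` is sent as an array of two blob strings, so `Frame::Array(vec![Frame::BlobString(b"GET".to_vec()), Frame::BlobString(b"key".to_vec())])` encodes to `*2\r\n$3\r\nGET\r\n$3\r\nkey\r\n`.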

Architecture

Performance

To get the most performance out of an application, it must scale linearly.[^1] Scalability problems usually arise when data is shared between threads (for example, false sharing[^3]).

To address this we use an scc::HashMap, a very efficient data structure. It can be shared across multiple threads without losing performance, except when there are too many threads. When that happens, we will partition the storage by load-balancing TCP connections across those threads as needed.

We also use a runtime based on io-uring to handle all I/O in the application: monoio.

[^1]: That is, if an application runs at 100 op/s on one thread, adding a second thread should bring it to 200 op/s: linear (or near-linear) scalability.

[^3]: An excellent article explaining it: alic.dev.
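The false-sharing problem mentioned above can be illustrated with a small sketch using only the standard library. Each thread increments its own counter; padding every counter to its own cache line keeps one thread's writes from invalidating its neighbours' lines. The names `PaddedCounter` and `count_in_parallel` are hypothetical, and the 64-byte line size is an assumption that holds on most x86-64 machines but not everywhere. This is not code from roster.

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// A counter aligned (and therefore padded) to 64 bytes.
/// 64 is an assumed cache-line size, not a universal constant.
#[repr(align(64))]
pub struct PaddedCounter(pub AtomicU64);

/// Spawn `threads` workers, each bumping only its own counter
/// `increments` times, then return the sum of all counters.
pub fn count_in_parallel(threads: usize, increments: u64) -> u64 {
    let counters: Arc<Vec<PaddedCounter>> = Arc::new(
        (0..threads)
            .map(|_| PaddedCounter(AtomicU64::new(0)))
            .collect(),
    );

    let handles: Vec<_> = (0..threads)
        .map(|i| {
            let counters = Arc::clone(&counters);
            thread::spawn(move || {
                for _ in 0..increments {
                    // Each thread touches only slot `i`, which sits on its own line.
                    counters[i].0.fetch_add(1, Ordering::Relaxed);
                }
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    counters.iter().map(|c| c.0.load(Ordering::Relaxed)).sum()
}
```

Without the `#[repr(align(64))]` attribute, eight `AtomicU64` values would fit in a single 64-byte line, and threads that never logically share data would still contend on it.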

In the same spirit as a shared-nothing architecture, we use one thread per core to make the most of the resources available on the hardware.

"Application tail latency is critical for services to meet their latency expectations. We have shown that the thread-per-core approach can reduce application tail latency of a key-value store by up to 71% compared to baseline Memcached running on commodity hardware and Linux."[^2]

[^2]: The Impact of Thread-Per-Core Architecture on Application Tail Latency
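As a rough sketch of the thread-per-core idea, the following starts one worker per available core, with each worker owning its state outright. It uses only the standard library, so it does not pin threads to cores and does not use io-uring; roster relies on monoio for that. The function name `run_thread_per_core` and the dummy workload are hypothetical.

```rust
use std::collections::HashMap;
use std::thread;

/// Start one worker per available core. Each worker fills a map that it
/// alone owns, then reports how many entries it holds.
pub fn run_thread_per_core(work_per_thread: usize) -> Vec<usize> {
    // Fall back to a single worker if the core count cannot be determined.
    let cores = thread::available_parallelism()
        .map(|n| n.get())
        .unwrap_or(1);

    let handles: Vec<_> = (0..cores)
        .map(|core| {
            thread::spawn(move || {
                // Thread-local state: nothing here is shared with other workers.
                let mut local: HashMap<String, usize> = HashMap::new();
                for i in 0..work_per_thread {
                    local.insert(format!("key-{core}-{i}"), i);
                }
                local.len()
            })
        })
        .collect();

    handles.into_iter().map(|h| h.join().unwrap()).collect()
}
```

Because no worker reads or writes another worker's map, there are no locks and no cross-core cache traffic on the hot path, which is the property the quoted paper credits for lower tail latency.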

Storage

For the storage, instead of having each thread handle its own part of the storage with load balancing based on TCP connections, it seems more efficient to share a storage between a number of threads.

We split the whole application into a number of Storage Segments, each shared between a fixed number of threads.
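The segment layout described above could be sketched as follows. This is an illustration under stated assumptions, not roster's implementation: it substitutes a standard-library `RwLock<HashMap>` for scc::HashMap, and the names `Storage`, `Segment`, and `segment_for_thread` are hypothetical.

```rust
use std::collections::HashMap;
use std::sync::{Arc, RwLock};

/// One storage segment, shared by a fixed group of threads.
/// Assumption: a locked std map stands in for a concurrent map here.
pub type Segment = Arc<RwLock<HashMap<String, String>>>;

pub struct Storage {
    segments: Vec<Segment>,
    threads_per_segment: usize,
}

impl Storage {
    /// Create enough segments so that each one serves at most
    /// `threads_per_segment` of the `threads` worker threads.
    pub fn new(threads: usize, threads_per_segment: usize) -> Self {
        assert!(threads_per_segment > 0, "threads_per_segment must be non-zero");
        // Ceiling division, with at least one segment.
        let count = ((threads + threads_per_segment - 1) / threads_per_segment).max(1);
        Storage {
            segments: (0..count)
                .map(|_| Arc::new(RwLock::new(HashMap::new())))
                .collect(),
            threads_per_segment,
        }
    }

    /// Return the segment that the worker with index `thread_id` should use.
    pub fn segment_for_thread(&self, thread_id: usize) -> Segment {
        let index = (thread_id / self.threads_per_segment) % self.segments.len();
        Arc::clone(&self.segments[index])
    }

    pub fn segment_count(&self) -> usize {
        self.segments.len()
    }
}
```

With 8 threads and 4 threads per segment, threads 0 to 3 share one segment and threads 4 to 7 share another, so a key written by thread 0 is visible to thread 3 but not to thread 4.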

References

- RESP3

- https://github.com/tair-opensource/compatibility-test-suite-for-redis

- https://github.com/redis/redis-specifications

- https://github.com/redis/redis-benchmarks-specification

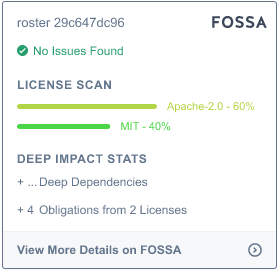

License

Dependencies

~16–28MB

~415K SLoC