49 releases

| Version | Released |
|---|---|
| 0.7.75 | Jul 8, 2024 |
| 0.7.72 | May 30, 2024 |
| 0.6.70 | Mar 25, 2024 |
| 0.6.68 | Jul 22, 2023 |
| 0.1.40 | Nov 23, 2022 |

#156 in Filesystem

61 downloads per month

3MB, 57K SLoC

Super Speedy Syslog Searcher! (s4)

Speedily search and merge log messages by datetime.

Super Speedy Syslog Searcher (s4) is a command-line tool to search

and merge varying log messages from varying log files, sorted by datetime.

Datetime filters may be passed to narrow the search to a datetime range.

s4 can read standardized log message formats like RFC 3164 and RFC 5424

("syslog"),

Red Hat Audit logs, strace output, and can read many non-standardized ad-hoc log

message formats, including multi-line log messages.

It also parses binary accounting records acct, lastlog, and utmp

(acct, pacct, lastlog, utmp, utmpx, wtmp),

systemd journal logs (.journal), and Microsoft Event Logs (.evtx).

s4 can read logs that are compressed (.bz2, .gz, .lz4, .xz), or archived logs (.tar).

s4 aims to be very fast.

- Use
- About
- More
- logging chaos: the problem s4 solves
- Further Reading
- Stargazers

Use

Install super_speedy_syslog_searcher

Assuming rust is installed, run

cargo install --locked super_speedy_syslog_searcher

A C compiler is required.

allocator mimalloc or jemalloc

The default allocator is the System allocator.

Allocator mimalloc is feature mimalloc and allocator jemalloc is feature jemalloc.

Allocator mimalloc is the fastest according to mimalloc project benchmarks.

jemalloc is also very good.

mimalloc

cargo install --locked super_speedy_syslog_searcher --features mimalloc

With mimalloc, the error Bus error is a known issue on some aarch64-unknown-linux-gnu systems.

$ s4 --version

Bus error

Either use jemalloc or the default System allocator.

jemalloc

cargo install --locked super_speedy_syslog_searcher --features jemalloc

Here are the packages for building super_speedy_syslog_searcher with jemalloc or mimalloc

on various Operating Systems.

Alpine

apk add gcc make musl-dev

Debian and Ubuntu

apt install gcc make libc6-dev

or

apt install build-essential

OpenSUSE

zypper install gcc glibc-devel make

Red Hat and CentOS

yum install gcc glibc-devel make

feature mimalloc on Windows

Compiling mimalloc on Windows requires lib.exe which is part of Visual Studio Build Tools.

Instructions at rustup.rs.

Run s4

For example, print all the log messages in syslog files under /var/log/

s4 /var/log

On Windows, print the ad-hoc logs under C:\Windows\Logs

s4.exe C:\Windows\Logs

On Windows, print all .log files under C:\Windows (with the help of Powershell)

Get-ChildItem -Filter '*.log' -File -Path "C:\Windows" -Recurse -ErrorAction SilentlyContinue `

| Select-Object -ExpandProperty FullName `

| s4.exe -

(note that UTF-16 encoded logs cannot be parsed, see Issue #16)

(note that opening too many files causes error too many files open, see Issue #270, so Get-ChildItem -Filter lessens the number of files opened by s4.exe)

On Windows, print the Windows Event logs

s4.exe C:\Windows\System32\winevt\Logs

Print the log messages after January 1, 2022 at 00:00:00

s4 /var/log -a 20220101

Print the log messages from January 1, 2022 00:00:00 to January 2, 2022

s4 /var/log -a 20220101 -b 20220102

or

s4 /var/log -a 20220101 -b @+1d

Print the log messages on January 1, 2022, from 12:00:00 to 16:00:00

s4 /var/log -a 20220101T120000 -b 20220101T160000

Print the record-keeping log messages from up to a day ago

(with the help of find)

find /var -xdev -type f \( \

-name 'lastlog' \

-or -name 'wtmp' \

-or -name 'wtmpx' \

-or -name 'utmp' \

-or -name 'utmpx' \

-or -name 'acct' \

-or -name 'pacct' \

\) \

2>/dev/null \

| s4 - -a=-1d

Print the journal log messages from up to an hour ago,

prepending the journal file name

(with the help of find)

find / -xdev -name '*.journal' -type f 2>/dev/null \

| s4 - -a=-1h -n

Print only the log messages that occurred two days ago

(with the help of GNU date)

s4 /var/log -a $(date -d "2 days ago" '+%Y%m%d') -b @+1d

Print only the log messages that occurred two days ago during the noon hour

(with the help of GNU date)

s4 /var/log -a $(date -d "2 days ago 12" '+%Y%m%dT%H%M%S') -b @+1h

Print only the log messages that occurred two days ago during the noon hour in

Bengaluru, India (timezone offset +05:30) and prepended with equivalent UTC

datetime (with the help of GNU date)

s4 /var/log -u -a $(date -d "2 days ago 12" '+%Y%m%dT%H%M%S+05:30') -b @+1h

--help

Speedily search and merge log messages by datetime.

DateTime filters may be passed to narrow the search.

s4 aims to be very fast.

Usage: s4 [OPTIONS] <PATHS>...

Arguments:

<PATHS>... Path(s) of log files or directories.

Directories will be recursed. Symlinks will be followed.

Paths may also be passed via STDIN, one per line. The user must

supply argument "-" to signify PATHS are available from STDIN.

Options:

-a, --dt-after <DT_AFTER>

DateTime Filter After: print log messages with a datetime that is at

or after this datetime. For example, "20200102T120000" or "-5d".

-b, --dt-before <DT_BEFORE>

DateTime Filter Before: print log messages with a datetime that is at

or before this datetime.

For example, "2020-01-03T23:00:00.321-05:30" or "@+1d+11h"

-t, --tz-offset <TZ_OFFSET>

Default timezone offset for datetimes without a timezone.

For example, log message "[20200102T120000] Starting service" has a

datetime substring "20200102T120000".

That datetime substring does not have a timezone offset

so this TZ_OFFSET value would be used.

Example values, "+12", "-0800", "+02:00", or "EDT".

To pass a value with leading "-" use "=" notation, e.g. "-t=-0800".

If not passed then the local system timezone offset is used.

[default: -07:00]

-z, --prepend-tz <PREPEND_TZ>

Prepend a DateTime in the timezone PREPEND_TZ for every line.

Used in PREPEND_DT_FORMAT.

-u, --prepend-utc

Prepend a DateTime in the UTC timezone offset for every line.

This is the same as "--prepend-tz Z".

Used in PREPEND_DT_FORMAT.

-l, --prepend-local

Prepend DateTime in the local system timezone offset for every line.

This is the same as "--prepend-tz +XX" where +XX is the local system

timezone offset.

Used in PREPEND_DT_FORMAT.

-d, --prepend-dt-format <PREPEND_DT_FORMAT>

Prepend a DateTime using the strftime format string.

If PREPEND_TZ is set then that value is used for any timezone offsets,

i.e. strftime "%z" "%:z" "%Z" values, otherwise the timezone offset value

is the local system timezone offset.

[Default: %Y%m%dT%H%M%S%.3f%z]

-n, --prepend-filename

Prepend file basename to every line.

-p, --prepend-filepath

Prepend file full path to every line.

-w, --prepend-file-align

Align column widths of prepended data.

--prepend-separator <PREPEND_SEPARATOR>

Separator string for prepended data.

[default: :]

--separator <LOG_MESSAGE_SEPARATOR>

An extra separator string between printed log messages.

Per log message not per line of text.

Accepts a basic set of backslash escape sequences,

e.g. "\0" for the null character, "\t" for tab, etc.

--journal-output <JOURNAL_OUTPUT>

The format for .journal file log messages.

Matches journalctl --output options.

[default: short]

[possible values: short, short-precise, short-iso, short-iso-precise,

short-full, short-monotonic, short-unix, verbose, export, cat]

-c, --color <COLOR_CHOICE>

Choose to print to terminal using colors.

[default: auto]

[possible values: always, auto, never]

--blocksz <BLOCKSZ>

Read blocks of this size in bytes.

May pass value as any radix (hexadecimal, decimal, octal, binary).

Using the default value is recommended.

Most useful for developers.

[default: 65536]

-s, --summary

Print a summary of files processed to stderr.

Most useful for developers.

-h, --help

Print help

-V, --version

Print version

Given a file path, the file format will be processed based on a best guess of

the file name.

If the file format is not guessed then it will be treated as a UTF8 text file.

Given a directory path, found file names that have well-known non-log file name

extensions will be skipped.

DateTime Filters may be strftime specifier patterns:

"%Y%m%dT%H%M%S*"

"%Y-%m-%d %H:%M:%S*"

"%Y-%m-%dT%H:%M:%S*"

"%Y/%m/%d %H:%M:%S*"

"%Y%m%d"

"%Y-%m-%d"

"%Y/%m/%d"

"+%s"

Each * is an optional trailing 3-digit fractional sub-seconds,

or 6-digit fractional sub-seconds, and/or timezone.

Pattern "+%s" is Unix epoch timestamp in seconds with a preceding "+".

For example, value "+946684800" is January 1, 2000 at 00:00, GMT.

DateTime Filters may be custom relative offset patterns:

"+DwDdDhDmDs" or "-DwDdDhDmDs"

"@+DwDdDhDmDs" or "@-DwDdDhDmDs"

Custom relative offset pattern "+DwDdDhDmDs" and "-DwDdDhDmDs" is the offset

from now (program start time) where "D" is a decimal number.

Each lowercase identifier is an offset duration:

"w" is weeks, "d" is days, "h" is hours, "m" is minutes, "s" is seconds.

For example, value "-1w22h" is one week and twenty-two hours in the past.

Value "+30s" is thirty seconds in the future.

Custom relative offset pattern "@+DwDdDhDmDs" and "@-DwDdDhDmDs" is relative

offset from the other datetime.

Arguments "-a 20220102 -b @+1d" are equivalent to "-a 20220102 -b 20220103".

Arguments "-a @-6h -b 20220101T120000" are equivalent to

"-a 20220101T060000 -b 20220101T120000".

Without a timezone, the Datetime Filter is presumed to be the local

system timezone.

Command-line passed timezones may be numeric timezone offsets,

e.g. "+09:00", "+0900", or "+09", or named timezone offsets, e.g. "JST".

Ambiguous named timezones will be rejected, e.g. "SST".

--prepend-tz and --tz-offset function independently:

--tz-offset is used to interpret processed log message datetime stamps that

do not have a timezone offset.

--prepend-tz affects what is pre-printed before each printed log message line.

--separator accepts backslash escape sequences:

"\0", "\a", "\b", "\e", "\f", "\n", "\r", "\\", "\t", "\v"

Resolved values of "--dt-after" and "--dt-before" can be reviewed in

the "--summary" output.

s4 uses file naming to determine the file type.

s4 can process files compressed and named .bz2, .gz, .lz4, .xz, and files

archived within a .tar file.

Log messages from different files with the same datetime are printed in order

of the arguments from the command-line.

Datetimes printed for .journal file log messages may differ from datetimes

printed by program journalctl.

See Issue #101

DateTime strftime specifiers are described at

https://docs.rs/chrono/latest/chrono/format/strftime/

DateTimes supported are only of the Gregorian calendar.

DateTimes supported language is English.

Further background and examples are at the project website:

https://github.com/jtmoon79/super-speedy-syslog-searcher/

Is s4 failing to parse a log file? Report an Issue at

https://github.com/jtmoon79/super-speedy-syslog-searcher/issues/new/choose
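The relative offset patterns described in the --help text can be illustrated with a short Python sketch. It is not s4's parser: the function name `parse_offset` and the regular expressions are hypothetical, and the sketch only handles the single-sign forms "+DwDdDhDmDs", "-DwDdDhDmDs" and their "@" variants, not compound values such as "@+1d+11h".

```python
import re
from datetime import datetime, timedelta

# Hypothetical helper, not s4's parser. Units follow the --help text:
# "w" weeks, "d" days, "h" hours, "m" minutes, "s" seconds.
_UNITS = {"w": "weeks", "d": "days", "h": "hours", "m": "minutes", "s": "seconds"}
_OFFSET_RE = re.compile(r"^(@?)([+-])((?:\d+[wdhms])+)$")


def parse_offset(text):
    """Return (relative_to_other, timedelta) for a value such as "-1w22h" or "@+1d"."""
    match = _OFFSET_RE.match(text)
    if match is None:
        raise ValueError(f"not a relative offset pattern: {text!r}")
    at_sign, sign, body = match.groups()
    parts = {}
    for number, unit in re.findall(r"(\d+)([wdhms])", body):
        name = _UNITS[unit]
        parts[name] = parts.get(name, 0) + int(number)
    delta = timedelta(**parts)
    if sign == "-":
        delta = -delta
    # A leading "@" means the offset is relative to the other datetime filter.
    return at_sign == "@", delta


# "-a 20220102 -b @+1d": the second filter is resolved relative to the first.
after = datetime(2022, 1, 2)
relative_to_other, delta = parse_offset("@+1d")
before = after + delta
print(before)
```

Without the "@", the same timedelta would be added to the program start time instead of to the other filter.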

About

Why s4?

Super Speedy Syslog Searcher (s4) is meant to aid Engineers in reviewing

varying log files in a datetime-sorted manner.

The primary use-case is to aid investigating problems wherein the time of

a problem occurrence is known and there are many available logs

but otherwise there is little source evidence.

Currently, log file formats vary widely. Most logs are an ad-hoc format. Even separate log files on the same system for the same service may have different message formats! Sorting these logged messages by datetime may be prohibitively difficult. The result is an engineer may have to "hunt and peck" among many log files, looking for problem clues around some datetime; so tedious!

Enter Super Speedy Syslog Searcher 🦸 ‼

s4 will print log messages from multiple log files in datetime-sorted order.

A "window" of datetimes may be passed, to constrain the period of printed

messages. This will assist an engineer that, for example, needs to view all

log messages that occurred two days ago between 12:00 and 12:05 among log files taken from multiple

systems.
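The idea of a datetime-sorted merge constrained to a window can be sketched in Python. This is an illustration of the concept only, not s4's implementation: the messages are hypothetical and assumed to be already parsed into (datetime, text) pairs, and `merge_window` is a name invented for this sketch.

```python
import heapq
from datetime import datetime

# Hypothetical, already-parsed messages: (datetime, text) pairs, each list
# sorted by datetime, standing in for two log files.
file_a = [
    (datetime(2022, 1, 1, 12, 0, 0), "a: service starting"),
    (datetime(2022, 1, 1, 12, 3, 0), "a: service ready"),
    (datetime(2022, 1, 1, 12, 9, 0), "a: heartbeat"),
]
file_b = [
    (datetime(2022, 1, 1, 12, 1, 0), "b: connection opened"),
    (datetime(2022, 1, 1, 12, 3, 0), "b: connection closed"),
]


def merge_window(sources, after, before):
    """Merge sorted sources by datetime, keeping messages within [after, before]."""
    merged = heapq.merge(*sources, key=lambda message: message[0])
    return [m for m in merged if after <= m[0] <= before]


window = merge_window(
    [file_a, file_b],
    after=datetime(2022, 1, 1, 12, 0, 0),
    before=datetime(2022, 1, 1, 12, 5, 0),
)
for when, text in window:
    print(when.isoformat(), text)
```

`heapq.merge` is stable, so messages with equal datetimes come out in the order the sources were passed, which mirrors the behavior the --help text describes for files given on the command line.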

The ulterior motive for Super Speedy Syslog Searcher was that the primary developer wanted an excuse to learn Rust 🦀 and wanted to create an open-source tool for a recurring need of some Software Test Engineers 😄

See the real-world example rationale in the section below,

logging chaos: the problem s4 solves.

Features

- Parses:
  - Ad-hoc log messages using formal datetime formats:
    - Internet Message Format (RFC 2822), e.g. Wed, 1 Jan 2020 22:00:00 PST message…
    - The BSD syslog Protocol (RFC 3164), e.g. <8>Jan 1 22:00:00 message…
    - Date and Time on the Internet: Timestamps (RFC 3339), e.g. 2020-01-01T22:00:00-08:00 message…
    - The Syslog Protocol (RFC 5424), e.g. 2020-01-01T22:00:00-08:00 message…
    - ISO 8601, e.g. 2020-01-01T22:00:00-08:00 message…, 20200101T220000-0800 message…, etc. [1]
  - Red Hat Audit Log files
  - strace output files with options -ttt or --timestamps, i.e. Unix epoch plus optional milliseconds, microseconds, or nanoseconds
  - binary user accounting records files (acct, pacct, lastlog, utmp, utmpx) from multiple Operating Systems and CPU architectures
  - binary Windows Event Log files
  - binary systemd journal files with printing options matching journalctl
  - many varying text log messages with ad-hoc datetime formats
  - multi-line log messages
- Inspects .tar archive files for parseable log files [2]
- Can process .bz2, .gz, .lz4, or .xz containing log files.
- Tested against "in the wild" log files from varying sources (see project path ./logs/)
- Prepends datetime and file paths, for easy programmatic parsing or visual traversal of varying log messages
- Comparable speed as GNU grep and sort
- Processes invalid UTF-8
- Accepts arbitrarily large files (see Hacks)

File name guessing

Given a file path, s4 will attempt to parse it. The type of file must be in

the name. Guesses are made about files with non-standard names.

For example, standard file name utmp will always be treated as a utmp record

file. But non-standard name log.utmp.1 is guessed to be a utmp record file.

Similar guesses are applied to lastlog, wtmp, acct, pacct,

journal, and evtx files.

When combined with compression or archive file name extensions,

e.g. .bz2, .gz, .lz4, or .xz, then s4 makes a best attempt at

guessing the compression or archive type and the file within the archive based

on the name.

For example, user.journal.gz is guessed to be a systemd journal file within a

gzip compressed file. However, if that same file is named something unusual like

user.systemd-journal.gz then it is guessed to be a text log file within a gzip

compressed file.

When a file type cannot be guessed then it is treated as a UTF8 text log file.

For example, a file named just unknown is not any obvious type, so s4 attempts

to parse it as a UTF8 ad-hoc text log file.

tar files are inspected for parseable files.[2]
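The guessing behavior described above can be approximated in a few lines of Python. This is a hypothetical heuristic written only to reproduce the examples in this section; the function name `guess`, the two lookup tables, and the "exact dot-separated part" rule are assumptions, and s4's real rules may differ.

```python
# Hypothetical heuristic for illustration; s4's real rules may differ.
COMPRESSION = {".bz2": "bzip2", ".gz": "gzip", ".lz4": "lz4", ".xz": "xz"}
BINARY_TYPES = {"acct", "pacct", "lastlog", "utmp", "utmpx", "wtmp", "journal", "evtx"}


def guess(file_name):
    """Return (compression or None, guessed file type) from the name alone."""
    compression = None
    lowered = file_name.lower()
    for extension, kind in COMPRESSION.items():
        if lowered.endswith(extension):
            compression = kind
            lowered = lowered[: -len(extension)]
            break
    # Any dot-separated part that exactly names a known type decides the guess.
    for part in lowered.split("."):
        if part in BINARY_TYPES:
            return compression, part
    # Nothing recognizable in the name: fall back to a text log file.
    return compression, "text"


for name in ("utmp", "log.utmp.1", "user.journal.gz", "user.systemd-journal.gz", "unknown"):
    print(name, guess(name))
```

Under this rule, user.systemd-journal.gz falls back to text because no dot-separated part is exactly "journal", matching the example given above.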

Directory walks

Given a directory path, s4 will walk the directory and all subdirectories and

follow symbolic links and cross file system paths.

s4 will ignore files with extensions that are known to be non-log files.

For example, files with extensions .dll, .mp3, .png, or .so, are

unlikely to be log files and so are not processed.

So given a file /tmp/file.mp3, an invocation of s4 /tmp will not attempt

to process file.mp3. An invocation of s4 /tmp/file.mp3 will attempt to

process file.mp3. It will be treated as a UTF8 text log file.
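The difference between a walked directory and an explicitly named file can be sketched in Python. The names `SKIP_EXTENSIONS` and `candidate_files` are hypothetical, and the skip list contains only the four extensions named above; s4's actual list presumably has more entries.

```python
import os
import tempfile

# Only the four extensions named in the text; the real skip list is
# presumably longer and is not reproduced here.
SKIP_EXTENSIONS = {".dll", ".mp3", ".png", ".so"}


def candidate_files(path):
    """Yield files to process: explicit file paths always, walked files filtered."""
    if os.path.isfile(path):
        yield path  # a file named explicitly is never skipped
        return
    # followlinks=True follows symbolic links (no protection against link cycles).
    for root, _dirs, files in os.walk(path, followlinks=True):
        for name in sorted(files):
            if os.path.splitext(name)[1].lower() not in SKIP_EXTENSIONS:
                yield os.path.join(root, name)


with tempfile.TemporaryDirectory() as tmp:
    for name in ("file.mp3", "messages.log"):
        open(os.path.join(tmp, name), "w").close()
    walked = [os.path.basename(p) for p in candidate_files(tmp)]
    explicit = [os.path.basename(p) for p in candidate_files(os.path.join(tmp, "file.mp3"))]
    print(walked, explicit)
```

Walking the temporary directory skips file.mp3, while passing file.mp3 directly yields it.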

Limitations

- Only processes UTF-8 or ASCII encoded syslog files. (Issue #16)
- Cannot process multi-file .gz files (only processes first stream found). (Issue #8)
- Cannot process multi-file .xz files (only processes first stream found). (Issue #11)
- Cannot process .zip archives (Issue #39)
- [1] ISO 8601
  - ISO 8601 forms recognized (using ISO descriptive format)
    - YYYY-MM-DDThh:mm:ss
    - YYYY-MM-DDThhmmss
    - YYYYMMDDThhmmss

    (may use date-time separator character 'T' or character blank space ' ')
  - ISO 8601 forms not recognized:
    - Absent seconds
    - Ordinal dates, i.e. "day of the year", format YYYY-DDD, e.g. "2022-321"
    - Week dates, i.e. "week-numbering year", format YYYY-Www-D, e.g. "2022-W25-1"
    - times without minutes and seconds (i.e. only hh)
- [2] Cannot process archive files or compressed files within other archive files or compressed files (Issue #14), e.g. cannot process logs.tar.xz, nor file log.gz within logs.tar

Hacks

- Entire .bz2 files are read once before processing (Issue #300)
- Entire .lz4 files are read once before processing (Issue #293)
- Entire .xz files are read into memory before printing (Issue #12)
- Entire .evtx files are read into memory before printing (Issue #86)
- Entire files within a .tar file are read into memory before printing (Issue #13)
- Entire user accounting record files are read into memory before printing
- Compressed .journal and .evtx files are extracted to a temporary file (Issue #284)

More

Comparisons

An overview of features of varying log mergers including GNU tools.

- GNU grep piped to GNU sort
- Super Speedy Syslog Searcher; s4
- logmerger; logmerger
- Toolong; tl
- logdissect; logdissect.py

| Symbol | Meaning |
|---|---|
| ✔ | Yes |
| ⬤ | Most |
| ◒ | Some |
| ✗ | No |
| ☐ | with an accompanying GNU program |
| ! | with user input |
| ‼ | with complex user input |

General Features

| Program | Source | CLI | TUI | Interactive | live tail | merge varying log formats | datetime search range |
|---|---|---|---|---|---|---|---|
| grep \| sort | C | ✔ | ✗ | ✗ | ☐ tail | ✗ | ‼ |
| s4 | Rust | ✔ | ✗ | ✗ | ✗ | ✔ | ✔ |
| logmerger | Python | ✔ | ✔ | ✔ | ✗ | ‼ | ✔ |
| tl | Python | ✔ | ✔ | ✔ | ✔ | ✗ | ✗ |
| logdissect.py | Python | ✔ | ✗ | ✗ | ✗ | ✗ | ✗ |

Formal Log DateTime Supported

| Program | RFC 2822 | RFC 3164 | RFC 3339 | RFC 5424 | ISO 8601 |
|---|---|---|---|---|---|
| grep \| sort | ✗ | ‼ | ! | ! | ! |
| s4 | ✔ | ✔ | ✔ | ✔ | ✔ |
| logmerger | ✗ | ✗ | ! | ! | ◒ |
| tl | ✗ | ✗ | ✔ | ✔ | ✔ |

Other Log or File Formats Supported

| Program | Ad-hoc text formats | Red Hat Audit Log | journal | acct/lastlog/utmp | .evtx | .pcap/.pcapng | .jsonl | strace |
|---|---|---|---|---|---|---|---|---|
| grep \| sort | ‼ | ! | ✗ | ✗ | ✗ | ✗ | ✗ | ✔ |
| s4 | ✔ | ✔ | ✔ | ✔ | ✔ | ✗ | ✔ | ✔ |
| logmerger | ‼ | ‼ | ✗ | ✗ | ✗ | ✔ | ✗ | ✔ |
| tl | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✔ | ✗ |

Archive Formats Supported

| Program | .gz | .lz4 | .bz | .bz2 | .xz | .tar | .zip |
|---|---|---|---|---|---|---|---|
| grep \| sort | ☐ zgrep | ☐ lz4 | ☐ bzip | ☐ bzip2 | ☐ xz | ✗ | ✗ |
| s4 | ✔ | ✔ | ✗ | ✔ | ✔ | ✔ | ✗ |
| logmerger | ✔ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| tl | ✔ | ✗ | ✔ | ✔ | ✗ | ✗ | ✗ |
| logdissect.py | ✔ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |

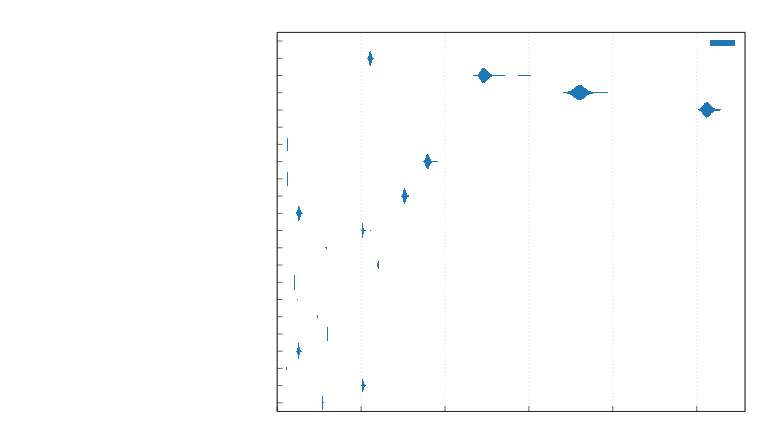

Speed Comparison

A comparison of merging three large log files running on Ubuntu 22 on WSL2.

- 2000 line log file, 1116357 bytes (≈1.1 MB), with high-plane unicode

- 2500 line log file, 1078842 bytes (≈1.0 MB), with high-plane unicode

- 5000 line log file, 2158138 bytes (≈2.1 MB), with high-plane unicode

Using hyperfine and GNU time to measure Max RSS.

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative | Max RSS [KB] |
|---|---|---|---|---|---|
| grep+sort | 41.0 ± 0.5 | 40.5 | 43.8 | 1.00 | 2740 |
| s4 (system) | 37.3 ± 1.5 | 35.3 | 44.6 | 1.00 | 48084 |
| s4 (mimalloc) | 30.3 ± 1.8 | 27.1 | 36.6 | 1.00 | 77020 |
| s4 (jemalloc) | 36.0 ± 2.0 | 32.5 | 43.2 | 1.00 | 69028 |
| logmerger | 720.2 ± 4.9 | 712.9 | 728.0 | 1.00 | 56332 |
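Every row reports a Relative value of 1.00, which would be expected if each command was measured in its own hyperfine run (an assumption; the source does not say). As a rough cross-check, the ratios against the fastest mean can be recomputed from the Mean column with a few lines of Python. The numbers are copied from the table; the comparison itself is not part of the original results.

```python
# Mean times in milliseconds, copied from the speed comparison table.
mean_ms = {
    "grep+sort": 41.0,
    "s4 (system)": 37.3,
    "s4 (mimalloc)": 30.3,
    "s4 (jemalloc)": 36.0,
    "logmerger": 720.2,
}

# Ratio of each mean to the fastest mean, rounded to two decimals.
fastest = min(mean_ms.values())
relative = {command: round(ms / fastest, 2) for command, ms in mean_ms.items()}

for command, ratio in relative.items():
    print(f"{command:14} {ratio:6.2f}")
```

By these means, s4 with mimalloc is the fastest, grep+sort takes about 1.35 times as long, and logmerger about 23.8 times as long. The ratios ignore the reported standard deviations.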

Programs tested:

- GNU grep 3.7, GNU sort 8.32
- s4 0.7.75
- logmerger 0.9.0 on Python 3.10.12
- tl 1.5.0 on Python 3.10.12
- hyperfine 1.11.0
- GNU time 1.9

See directory results in compare-log-mergers.txt.

Benches

Function slice_contains_X_2_unroll is a custom implementation of slice.contains. It is used for string searching and specifically to bypass unnecessary regular expression matches.

According to the flamegraph, regular expression matches are very expensive.

slice_contains_X_2_unroll fares as well as memchr and stringzilla in some cases according to

this criterion benchmark report.

See the full 0.7.74 criterion benchmark report.

Building locally

See section Install super_speedy_syslog_searcher.

Parsing .journal files

Requires libsystemd to be installed to use libsystemd.so at runtime.

Requesting Support For DateTime Formats; your particular log file

If you have found a log file that Super Speedy Syslog Searcher does not parse then you may create a new Issue type Feature request (datetime format).

Here is an example user-submitted Issue.

"syslog" and other project definitions

syslog

In this project, the term "syslog" is used generously to refer to any log message that has a datetime stamp on the first line of log text.

Technically, "syslog" is defined among several RFCs prescribing fields, formats, lengths, and other technical constraints. In this project, the term "syslog" is interchanged with "log".

The term "sysline" refers to one log message which may comprise multiple text lines.

See docs section Definitions of data for more project definitions.

log message

A "log message" is a single log entry for any type of logging scheme; an entry in a utmpx file, an entry in a systemd journal, an entry in a Windows Event Log, a formal RFC 5424 syslog message, or an ad-hoc log message.

logging chaos: the problem s4 solves

In practice, most log file formats are an ad-hoc format. And among formally defined log formats, there are many variations. The result is merging varying log messages by datetime is prohibitively tedious. If an engineer is investigating a problem that is symptomatic among many log files then the engineer must "hunt and peck" among those many log files. Log files can not be merged for a single coherent view.

The following real-world example log files are available in project directory

./logs.

open-source software examples

nginx webserver

For example, the open-source nginx web server

logs access attempts in an ad-hoc format in the file access.log

192.168.0.115 - - [08/Oct/2022:22:26:35 +0000] "GET /DOES-NOT-EXIST HTTP/1.1" 404 0 "-" "curl/7.76.1" "-"

which is an entirely dissimilar log format to the neighboring nginx log file,

error.log

2022/10/08 22:26:35 [error] 6068#6068: *3 open() "/usr/share/nginx/html/DOES-NOT-EXIST" failed (2: No such file or directory), client: 192.168.0.115, server: _, request: "GET /DOES-NOT-EXIST HTTP/1.0", host: "192.168.0.100"

nginx is following the bad example set by the apache web server.
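To make the mismatch concrete, here is a Python sketch that extracts the datetime from each of the two nginx lines above (the error.log line is shortened). Each file needs its own slicing rule and its own strptime format, and the error.log stamp carries no timezone, so the sketch assumes UTC for it. This illustrates the manual effort involved and is not how s4 parses these files.

```python
from datetime import datetime, timezone

access_line = '192.168.0.115 - - [08/Oct/2022:22:26:35 +0000] "GET /DOES-NOT-EXIST HTTP/1.1" 404 0 "-" "curl/7.76.1" "-"'
error_line = '2022/10/08 22:26:35 [error] 6068#6068: *3 open() "/usr/share/nginx/html/DOES-NOT-EXIST" failed'

# access.log: the datetime sits between the first "[" and "]".
stamp = access_line[access_line.index("[") + 1 : access_line.index("]")]
access_dt = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z")

# error.log: the datetime is the first 19 characters and has no timezone,
# so one must be assumed (UTC here, an assumption of this sketch).
error_dt = datetime.strptime(error_line[:19], "%Y/%m/%d %H:%M:%S").replace(tzinfo=timezone.utc)

print(access_dt.isoformat(), error_dt.isoformat(), access_dt == error_dt)
```

Only after both stamps are normalized to timezone-aware datetimes can the two messages be compared or sorted together.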

Debian 11

Here are log snippets from a Debian 11 host.

file /var/log/alternatives.log

update-alternatives 2022-10-10 23:59:47: run with --quiet --remove rcp /usr/bin/ssh

file /var/log/dpkg.log

2022-10-10 15:15:02 upgrade gpgv:amd64 2.2.27-2 2.2.27-2+deb11u1

file /var/log/kern.log

Oct 10 23:07:16 debian11-b kernel: [ 0.10034] Linux version 5.10.0-11-amd64

file /var/log/unattended-upgrades/unattended-upgrades-shutdown.log

2022-10-10 23:07:16,775 WARNING - Unable to monitor PrepareForShutdown() signal, polling instead.

binary files

And then there are binary files, such as the wtmp file on Linux and other

Unix Operating Systems.

Using tool utmpdump, a utmp record structure is converted to text like:

[7] [12103] [ts/0] [user] [pts/0] [172.1.2.1] [172.1.2.2] [2023-03-05T23:12:36,270185+00:00]

And from a systemd .journal file, read using journalctl

Mar 03 10:26:10 host systemd[1]: Started OpenBSD Secure Shell server.

░░ Subject: A start job for unit ssh.service has finished successfully

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ A start job for unit ssh.service has finished successfully.

░░

░░ The job identifier is 120.

Mar 03 10:31:23 host sshd[4559]: Accepted login for user1 from 172.1.2.1 port 51730 ssh2

Try merging those two log messages by datetime using GNU grep, sort, sed,

or awk!

Additionally, if the wtmp file is from a different architecture

or Operating System, then the binary record structure is likely not parseable

by the resident utmpdump tool. What then!?

commercial software examples

Commercial software and computer hardware vendors nearly always use ad-hoc log message formatting that is even more unpredictable among each log file on the same system.

Synology DiskStation

Here are log file snippets from a Synology DiskStation host.

file DownloadStation.log

2019/06/23 21:13:34 (system) trigger DownloadStation 3.8.13-3519 Begin start-stop-status start

file sfdisk.log

2019-04-06T01:07:40-07:00 dsnet sfdisk: Device /dev/sdq change partition.

file synobackup.log

info 2018/02/24 02:30:04 SYSTEM: [Local][Backup Task Backup1] Backup task started.

(yes, those are tab characters)

Mac OS 12

Here are log file snippets from a Mac OS 12.6 host.

file /var/log/system

Oct 11 15:04:55 localhost syslogd[110]: Configuration Notice:

ASL Module "com.apple.cdscheduler" claims selected messages.

Those messages may not appear in standard system log files or in the ASL database.

file /var/log/wifi

Thu Sep 21 23:05:35.850 Usb Host Notification NOT activated

file /var/log/fsck_hfs.log

/dev/rdisk2s2: fsck_hfs started at Thu Sep 21 21:31:05 2023

QUICKCHECK ONLY; FILESYSTEM CLEAN

file /var/log/anka.log

Fri Sep 22 00:06:05 UTC 2023: Checking /Library/Developer/CoreSimulator/Volumes/watchOS_20S75...

file /var/log/displaypolicyd.log

2023-09-15 04:26:56.330256-0700: Started at Fri Sep 15 04:26:56 2023

file /var/log/com.apple.xpc.launchd/launchd.log.1

2023-10-26 16:56:23.287770 <Notice>: swap enabled

file /var/log/asl/logs/aslmanager.20231026T170200+00

Oct 26 17:02:00: aslmanager starting

Did you also notice how the log file names differ in unexpected ways?

Microsoft Windows 10

Here are log snippets from a Windows 10 host.

file ${env:SystemRoot}\debug\mrt.log

Microsoft Windows Malicious Software Removal Tool v5.83, (build 5.83.13532.1)

Started On Thu Sep 10 10:08:35 2020

file ${env:SystemRoot}\comsetup.log

COM+[12:24:34]: ********************************************************************************

COM+[12:24:34]: Setup started - [DATE:05,27,2020 TIME: 12:24 pm]

file ${env:SystemRoot}\DirectX.log

11/01/19 20:03:40: infinst: Installed file C:\WINDOWS\system32\xactengine2_1.dll

file ${env:SystemRoot}/Microsoft.NET/Framework/v4.0.30319/ngen.log

09/15/2022 14:13:22.951 [515]: 1>Warning: System.IO.FileNotFoundException: Could not load file or assembly

file ${env:SystemRoot}/Performance/WinSAT/winsat.log

68902359 (21103) - exe\logging.cpp:0841: --- START 2022\5\17 14:26:09 PM ---

68902359 (21103) - exe\main.cpp:4363: WinSAT registry node is created or present

(yes, it reads hour 14, and PM… 🙄)

Summary

This chaotic logging approach is typical of commercial and open-source software, AND IT'S A MESS! Attempting to merge log messages by their natural sort mechanism, a datetime stamp, is difficult to impossible.

Hence the need for Super Speedy Syslog Searcher! 🦸

s4 merges varying log files into a single coherent datetime-sorted log.

Further Reading

Stargazers

Dependencies

~27–40MB

~721K SLoC