6 releases

| Version | Released |
|---|---|
| 0.5.2 | Nov 30, 2024 |
| 0.5.0 | Apr 24, 2024 |
| 0.3.2 | Sep 5, 2023 |
| 0.3.0 | Jul 11, 2023 |
| 0.2.0 | Mar 25, 2023 |

#932 in Concurrency

9,086 downloads per month

Used in 9 crates (3 directly)

185KB, 4K SLoC

sea-streamer-redis: Redis Backend

This is the Redis backend implementation for SeaStreamer. This crate provides a high-level async API on top of Redis that makes working with Redis Streams fool-proof:

- Implements the familiar SeaStreamer abstract interface

- A comprehensive type system that guides/restricts you with the API

- High-level API, so you don't call `XADD`, `XREAD` or `XACK` anymore

- Mutex-free implementation: concurrency achieved by message passing

- Pipelined `XADD` and paged `XREAD`, with a throughput in the realm of 100k messages per second

While we'd like to provide a Kafka-like client experience, there are some fundamental differences between Redis and Kafka:

- In Redis sequence numbers are not contiguous

- In Kafka sequence numbers are contiguous

- In Redis messages are dispatched to consumers among group members in a first-ask-first-served manner, which leads to the next point

- In Kafka consumer <-> shard is 1 to 1 in a consumer group

- In Redis `ACK` has to be done per message

- In Kafka only 1 Ack (read-up-to) is needed for a series of reads
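To make the first difference concrete: a Redis Stream entry ID has the form `<milliseconds>-<sequence>`, so IDs are ordered but two consecutive messages are rarely numerically adjacent. The sketch below parses and compares such IDs. The `StreamId` type and `is_adjacent` function are hypothetical names used only for illustration; they are not part of the sea-streamer-redis API.

```rust
use std::str::FromStr;

/// Hypothetical helper type for illustration; not part of sea-streamer-redis.
/// A Redis Stream entry ID has the form `<milliseconds>-<sequence>`.
/// Deriving `Ord` compares `millis` first, then `seq`, which matches Redis ordering.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub struct StreamId {
    pub millis: u64,
    pub seq: u64,
}

impl FromStr for StreamId {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        let (ms, seq) = s
            .split_once('-')
            .ok_or_else(|| format!("missing '-' in stream ID: {s}"))?;
        Ok(StreamId {
            millis: ms
                .parse::<u64>()
                .map_err(|e| format!("bad timestamp: {e}"))?,
            seq: seq
                .parse::<u64>()
                .map_err(|e| format!("bad sequence: {e}"))?,
        })
    }
}

/// True only if `b` directly follows `a` within the same millisecond.
/// Across milliseconds there is no way to tell from the IDs alone
/// whether any message sits in between.
pub fn is_adjacent(a: StreamId, b: StreamId) -> bool {
    a.millis == b.millis && b.seq == a.seq + 1
}
```

A consumer therefore cannot detect a gap by checking `next == prev + 1` as it could with Kafka offsets; it can only rely on ordering.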

What's already implemented:

- RealTime mode with AutoStreamReset

- Resumable mode with auto-ack and/or auto-commit

- LoadBalanced mode with failover behaviour

- Seek/rewind to point in time

- Basic stream sharding: split a stream into multiple sub-streams
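As a rough illustration of the last item, splitting a stream into sub-streams amounts to picking a shard for each message and writing to a per-shard key. The sketch below uses a round-robin policy. `RoundRobin`, `next_shard` and the `stream:shard` key naming in `sub_stream_key` are assumptions made for this example, and the crate's own sharding API may look different.

```rust
/// Hypothetical round-robin sharder for illustration only;
/// the crate's own sharding API may differ.
pub struct RoundRobin {
    num_shards: u32,
    next: u32,
}

impl RoundRobin {
    pub fn new(num_shards: u32) -> Self {
        assert!(num_shards > 0, "need at least one shard");
        Self { num_shards, next: 0 }
    }

    /// Returns the shard for the next message and advances the cursor.
    pub fn next_shard(&mut self) -> u32 {
        let shard = self.next;
        self.next = (self.next + 1) % self.num_shards;
        shard
    }
}

/// Assumed naming scheme for illustration: one Redis key per sub-stream.
pub fn sub_stream_key(stream: &str, shard: u32) -> String {
    format!("{stream}:{shard}")
}
```

Round-robin spreads load evenly but does not keep related messages together; a key-based hash would be the alternative when per-key ordering matters.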

It's best to look through the tests for an illustration of the different streaming behaviour.

How does SeaStreamer offer better concurrency?

Consider the following simple stream processor:

```
loop {
    let input = XREAD.await;
    let output = process(input).await;
    XADD(output).await;
}
```

When it's reading or writing, it's not processing. So it wastes time sitting idle and picks up messages with a higher delay, which in turn limits the throughput. In addition, the ideal batch size for reads may not be the ideal batch size for writes.

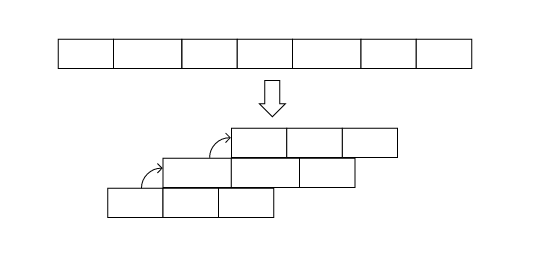

With SeaStreamer, the read and write loops are separated from your process loop, so they can all happen in parallel (async tasks in Rust can run on a multi-threaded runtime, so this is truly parallel)!
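The idea of decoupled loops can be sketched with the standard library alone. The example below is not how SeaStreamer is implemented: it uses OS threads and `std::sync::mpsc` channels as a stand-in for async tasks, and `run_pipeline` is a hypothetical name. The input `Vec` stands in for `XREAD` results and the returned `Vec` stands in for `XADD` calls.

```rust
use std::sync::mpsc;
use std::thread;

/// Runs a three-stage pipeline: a reader, a processor and a writer, each on
/// its own thread and connected by channels (message passing, no mutexes).
pub fn run_pipeline(inputs: Vec<u64>, process: fn(u64) -> u64) -> Vec<u64> {
    let (read_tx, read_rx) = mpsc::channel::<u64>();
    let (write_tx, write_rx) = mpsc::channel::<u64>();

    // Read loop: keeps fetching while the processor is busy.
    let reader = thread::spawn(move || {
        for msg in inputs {
            if read_tx.send(msg).is_err() {
                break; // the processor has gone away
            }
        }
        // `read_tx` is dropped here, which closes the channel.
    });

    // Process loop: waits only on the channels, never on network I/O.
    let processor = thread::spawn(move || {
        for msg in read_rx {
            if write_tx.send(process(msg)).is_err() {
                break; // the writer has gone away
            }
        }
    });

    // Write loop: drains outputs until the processor closes its channel.
    let writer = thread::spawn(move || write_rx.into_iter().collect::<Vec<u64>>());

    reader.join().expect("reader panicked");
    processor.join().expect("processor panicked");
    writer.join().expect("writer panicked")
}
```

Because each stage owns its end of a channel, the stages can use different batch sizes and none of them blocks the others while it waits.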

If you are reading from a consumer group, you also have to consider when to ACK and how many ACKs to batch in one request. SeaStreamer can commit in the background on a regular interval, or you can commit asynchronously without blocking your process loop.
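The batching decision can be pictured as a small buffer that flushes either when enough IDs have accumulated or when enough time has passed. The following is a minimal sketch under those assumptions; `AckBatcher` and its methods are hypothetical and do not reflect the crate's internals. Time is passed in explicitly so the logic is easy to test.

```rust
use std::time::{Duration, Instant};

/// Hypothetical ACK batcher for illustration; not the crate's implementation.
pub struct AckBatcher {
    pending: Vec<String>,
    max_batch: usize,
    interval: Duration,
    last_flush: Instant,
}

impl AckBatcher {
    pub fn new(max_batch: usize, interval: Duration, now: Instant) -> Self {
        Self {
            pending: Vec::new(),
            max_batch,
            interval,
            last_flush: now,
        }
    }

    /// Queues a message ID and returns a batch if one is due.
    pub fn ack(&mut self, id: String, now: Instant) -> Option<Vec<String>> {
        self.pending.push(id);
        self.flush_if_due(now)
    }

    /// Returns the pending IDs when the batch is full or the interval has
    /// elapsed; returns `None` if nothing is due or nothing is pending.
    pub fn flush_if_due(&mut self, now: Instant) -> Option<Vec<String>> {
        let due = self.pending.len() >= self.max_batch
            || now.duration_since(self.last_flush) >= self.interval;
        if due && !self.pending.is_empty() {
            self.last_flush = now;
            Some(std::mem::take(&mut self.pending))
        } else {
            None
        }
    }
}
```

Each returned batch would correspond to one `XACK` request carrying several IDs, issued from a background task rather than from the process loop.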

In the future, we'd like to support Redis Cluster, because sharding without clustering is not very useful. Right now it's very much a work in progress. It's quite a difficult task, because clients have to take responsibility when working with a cluster. In Redis, shards and nodes form an M-N mapping: shards can be moved among nodes at any time, which makes testing much more difficult. Let us know if you'd like to help!
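One example of that client-side responsibility is working out which hash slot a key belongs to. Redis Cluster assigns each key to one of 16384 slots using CRC16 (the XMODEM variant), and hashes only the part between `{` and `}` when a non-empty hash tag is present. The sketch below follows that rule. The function names are hypothetical, and nothing here is taken from sea-streamer-redis, which does not yet support clusters.

```rust
/// CRC16, XMODEM variant: polynomial 0x1021, initial value 0, no reflection.
pub fn crc16_xmodem(data: &[u8]) -> u16 {
    let mut crc: u16 = 0;
    for &byte in data {
        crc ^= (byte as u16) << 8;
        for _ in 0..8 {
            crc = if crc & 0x8000 != 0 {
                (crc << 1) ^ 0x1021
            } else {
                crc << 1
            };
        }
    }
    crc
}

/// Maps a key to one of the 16384 cluster hash slots.
/// If the key contains `{...}` with non-empty content, only that content
/// is hashed, so keys sharing a tag land in the same slot.
pub fn key_hash_slot(key: &[u8]) -> u16 {
    let mut hashed = key;
    if let Some(open) = key.iter().position(|&b| b == b'{') {
        if let Some(len) = key[open + 1..].iter().position(|&b| b == b'}') {
            if len > 0 {
                hashed = &key[open + 1..open + 1 + len];
            }
        }
    }
    crc16_xmodem(hashed) % 16384
}
```

The slot only identifies a shard. Which node currently serves that slot can change at any time, so a client also has to track the slot-to-node map and follow redirections.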

You can quickly start a Redis instance via Docker:

```sh
docker run -d --rm --name redis -p 6379:6379 redis
```

There is also a small utility to dump Redis Streams messages into a SeaStreamer file.

This crate is built on top of redis.

Dependencies

~5–19MB

~282K SLoC