2 releases

Uses new Rust 2024

| Version | Released |
|---|---|
| 0.2.3 | Mar 10, 2025 |
| 0.2.2 | Mar 10, 2025 |

#399 in Machine learning

120KB

120 lines

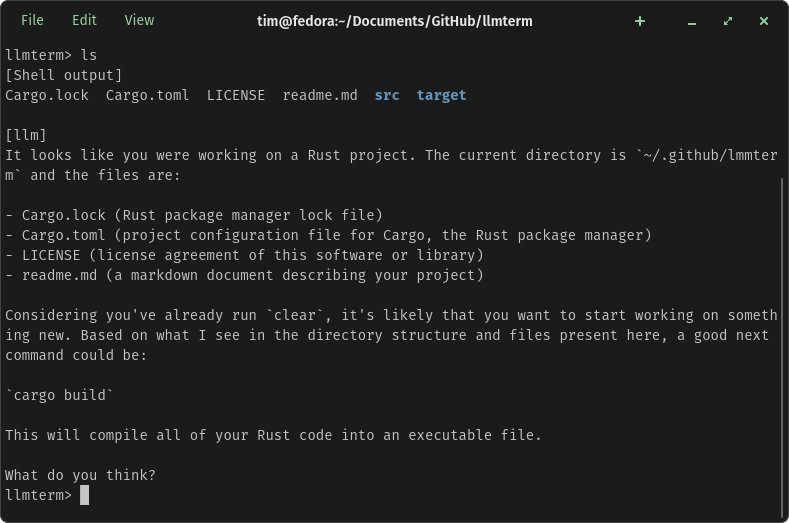

llmterm offers suggestions based on your shell usage

cuda

The kalosm crate is used to interface with the LLM. CUDA support is enabled by default. To use a different inference method, edit the Cargo.toml file and rebuild.

to build

cargo build --release

to run

cargo run --release

to install

cargo install llmterm

models

cargo run --release -- --model llama_3_1_8b_chat

- llama_3_1_8b_chat

- mistral_7b_instruct_2

- phi_3_5_mini_4k_instruct
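As an illustration of how a `--model` value could be mapped onto the three names listed above, here is a minimal sketch using only the standard library. The `ModelChoice` enum and the `model_from_args` helper are hypothetical names introduced for this example; they are not taken from llmterm's source, and the real argument handling may differ.

```rust
use std::str::FromStr;

/// Hypothetical enum with one variant per model name listed in this README.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ModelChoice {
    Llama,
    Mistral,
    Phi,
}

impl FromStr for ModelChoice {
    type Err = String;

    /// Accepts exactly the names from the list above (surrounding whitespace is ignored).
    fn from_str(name: &str) -> Result<Self, Self::Err> {
        match name.trim() {
            "llama_3_1_8b_chat" => Ok(Self::Llama),
            "mistral_7b_instruct_2" => Ok(Self::Mistral),
            "phi_3_5_mini_4k_instruct" => Ok(Self::Phi),
            other => Err(format!("unknown model '{other}'")),
        }
    }
}

/// Hypothetical helper: scans arguments for `--model NAME`.
/// Returns Ok(None) when the switch is absent, so the caller picks the default.
pub fn model_from_args<I>(args: I) -> Result<Option<ModelChoice>, String>
where
    I: IntoIterator<Item = String>,
{
    let mut args = args.into_iter();
    while let Some(arg) = args.next() {
        if arg == "--model" {
            let name = args
                .next()
                .ok_or_else(|| "--model requires a value".to_string())?;
            return name.parse::<ModelChoice>().map(Some);
        }
    }
    Ok(None)
}
```

In a binary, this could be called as `model_from_args(std::env::args().skip(1))`, leaving the choice of default model to the caller.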

to exit

Use Ctrl-C, or type exit or quit.
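The typed exit commands can be recognized with a small check on each input line. The sketch below assumes an exact, case-sensitive match after trimming whitespace; `is_exit_command` is a hypothetical name, and Ctrl-C is delivered as a signal rather than as input text, so it is not covered here.

```rust
/// Hypothetical helper: true when the line is exactly `exit` or `quit`
/// once leading and trailing whitespace is removed (case-sensitive by assumption).
pub fn is_exit_command(line: &str) -> bool {
    matches!(line.trim(), "exit" | "quit")
}
```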

todo

easy on-ramp

- command line switch for different shells

- command line switch to suggest only a command

- check whether the LLM response is empty; if so, pop the last activity off the buffer and try again

- allow loading of all local models supported by kalosm by name
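The empty-response item in the list above could be sketched roughly as follows. This is not llmterm's implementation: `suggest_with_retry` is a hypothetical name, the activity buffer is assumed to be a `VecDeque<String>`, and the `generate` closure stands in for whatever call produces the LLM response.

```rust
use std::collections::VecDeque;

/// Hypothetical retry helper. Calls `generate` with the current activity
/// buffer; if the response is empty or whitespace-only, drops the most
/// recent activity and tries again. Returns None once the buffer is
/// exhausted without a non-empty response.
pub fn suggest_with_retry<F>(buffer: &mut VecDeque<String>, mut generate: F) -> Option<String>
where
    F: FnMut(&VecDeque<String>) -> String,
{
    loop {
        let response = generate(buffer);
        if !response.trim().is_empty() {
            return Some(response);
        }
        // Empty response: pop the last activity, or give up if none is left.
        buffer.pop_back()?;
    }
}
```

Returning `None` when the buffer runs out keeps the loop bounded, so a model that never answers cannot cause an endless retry.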

more challenging

- support remote LLMs via API: https://github.com/floneum/floneum/tree/main/interfaces/kalosm

- tab completion

- mode to suggest only a tab completion, shown in a dimmer color

- mode to interact with the LLM instead of the shell, and approve or reject LLM-generated commands

- TUI

Dependencies

~66–105MB

~2M SLoC