2 unstable releases

| 0.10.0-dev |

|

|---|---|

| 0.2.0 | Jul 2, 2024 |

| 0.1.1 |

|

| 0.1.0 | Apr 20, 2024 |

#23 in #event-processing

66 downloads per month

Used in arroyo-udf-plugin

49KB

898 lines

Arroyo Cloud | Getting started | Docs | Discord | Website

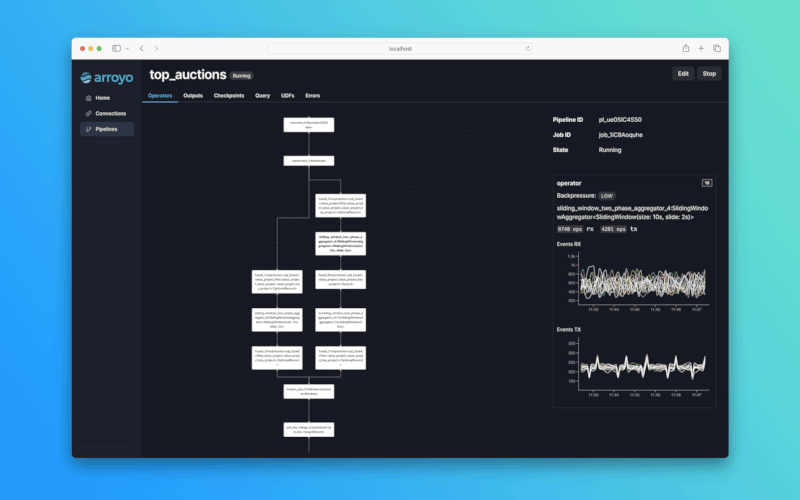

Arroyo is a distributed stream processing engine written in Rust, designed to efficiently perform stateful computations on streams of data. Unlike traditional batch processing, streaming engines can operate on both bounded and unbounded sources, emitting results as soon as they are available.

In short: Arroyo lets you ask complex questions of high-volume real-time data with subsecond results.

Features

🦀 SQL and Rust pipelines

🚀 Scales up to millions of events per second

🪟 Stateful operations like windows and joins

🔥State checkpointing for fault-tolerance and recovery of pipelines

🕒 Timely stream processing via the Dataflow model

Use cases

Some example use cases include:

- Detecting fraud and security incidents

- Real-time product and business analytics

- Real-time ingestion into your data warehouse or data lake

- Real-time ML feature generation

Why Arroyo

There are already a number of existing streaming engines out there, including Apache Flink, Spark Streaming, and Kafka Streams. Why create a new one?

- Serverless operations: Arroyo pipelines are designed to run in modern cloud environments, supporting seamless scaling, recovery, and rescheduling

- High performance SQL: SQL is a first-class concern, with consistently excellent performance

- Designed for non-experts: Arroyo cleanly separates the pipeline APIs from its internal implementation. You don't need to be a streaming expert to build real-time data pipelines.

Getting Started

You can get started with a single node Arroyo cluster by running the following docker command:

$ docker run -p 8000:8000 ghcr.io/arroyosystems/arroyo-single:latest

or if you have Cargo installed, you can use the arroyo cli:

$ cargo install arroyo

$ arroyo start

Then, load the Web UI at http://localhost:8000.

For a more in-depth guide, see the getting started guide.

Once you have Arroyo running, follow the tutorial to create your first real-time pipeline.

Developing Arroyo

We love contributions from the community! See the developer setup guide to get started, and reach out to the team on discord or create an issue.

Community

- Discord — support and project discussion

- GitHub issues — bugs and feature requests

- Arroyo Blog — updates from the Arroyo team

Arroyo Cloud

Don't want to self-host? Arroyo Systems provides fully-managed cloud hosting for Arroyo. Sign up here.

Dependencies

~16–25MB

~348K SLoC