12 releases (5 breaking)

Uses new Rust 2024

| Version | Released |
|---|---|
| 0.6.0 | Apr 17, 2025 |
| 0.5.5 | Apr 15, 2025 |
| 0.5.4 | Mar 29, 2025 |
| 0.4.0 | Mar 6, 2025 |
| 0.1.0 | Feb 22, 2025 |


oneiromancer

"A large fraction of the flaws in software development are due to programmers not fully understanding all the possible states their code may execute in." -- John Carmack

"Can it run Doom?" -- https://canitrundoom.org/

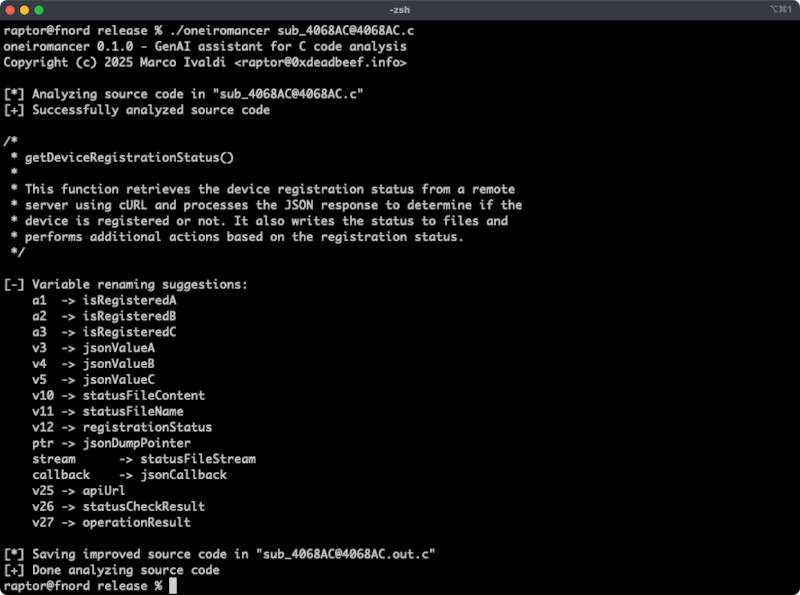

Oneiromancer is a reverse engineering assistant that uses a locally running LLM that has been fine-tuned for Hex-Rays pseudo-code to aid with code analysis. It can analyze a function or a smaller code snippet, returning a high-level description of what the code does, a recommended name for the function, and variable renaming suggestions, based on the results of the analysis.

Features

- Cross-platform support for the fine-tuned LLM aidapal based on mistral-7b-instruct.

- Easy integration with the pseudo-code extractor haruspex and popular IDEs.

- Code description, recommended function name, and variable renaming suggestions are printed on the terminal.

- Improved pseudo-code of each analyzed function is saved in a separate file for easy inspection.

- External crates can invoke analyze_code or analyze_file to analyze pseudo-code and then process analysis results.
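The per-file output mentioned above follows the `<target_file>.c` to `<target_file>.out.c` naming shown in the Usage section. A minimal sketch of that mapping, using only the standard library; `output_path` is a hypothetical helper for illustration and is not taken from the crate's API:

```rust
use std::path::{Path, PathBuf};

/// Derive the output path from the input path, e.g. `target.c` -> `target.out.c`.
/// Hypothetical helper, not part of the oneiromancer API.
fn output_path(input: &Path) -> PathBuf {
    // `with_extension` swaps the final extension (`c`) for `out.c`.
    input.with_extension("out.c")
}

fn main() {
    let out = output_path(Path::new("target.c"));
    println!("{}", out.display());
}
```

Note that an input without an extension simply gains `.out.c`, which may or may not match what the tool itself does.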

Blog post

See also

- https://www.atredis.com/blog/2024/6/3/how-to-train-your-large-language-model

- https://huggingface.co/AverageBusinessUser/aidapal

- https://github.com/atredispartners/aidapal

- https://plugins.hex-rays.com/atredispartners/aidapal

Installing

The easiest way to get the latest release is via crates.io:

cargo install oneiromancer

To install as a library, run the following command in your project directory:

cargo add oneiromancer

Compiling

Alternatively, you can build from source:

git clone https://github.com/0xdea/oneiromancer

cd oneiromancer

cargo build --release

Configuration

- Download and install Ollama.

- Download the fine-tuned weights and the Ollama modelfile from Hugging Face:

wget https://huggingface.co/AverageBusinessUser/aidapal/resolve/main/aidapal-8k.Q4_K_M.gguf

wget https://huggingface.co/AverageBusinessUser/aidapal/resolve/main/aidapal.modelfile

- Configure Ollama by running the following commands within the directory in which you downloaded the files:

ollama create aidapal -f aidapal.modelfile

ollama list
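To confirm that the model was registered, the listing printed by `ollama list` can be checked for an `aidapal` entry. The sketch below is one way to do that from Rust. It assumes the listing starts with a header row and that each following row begins with the model name, optionally followed by a `:tag` suffix; `model_listed` is a hypothetical helper, not part of oneiromancer or Ollama.

```rust
use std::process::Command;

/// Return true if `listing` (the text printed by `ollama list`) has a row
/// whose first column is `model`, with or without a `:tag` suffix.
/// Assumption: the first line of the listing is a header row.
fn model_listed(listing: &str, model: &str) -> bool {
    listing
        .lines()
        .skip(1) // skip the assumed header row
        .filter_map(|line| line.split_whitespace().next())
        .any(|name| name == model || name.split(':').next() == Some(model))
}

fn main() {
    match Command::new("ollama").arg("list").output() {
        Ok(out) => {
            let listing = String::from_utf8_lossy(&out.stdout);
            if model_listed(&listing, "aidapal") {
                println!("aidapal is registered");
            } else {
                println!("aidapal not found; re-run `ollama create`");
            }
        }
        Err(err) => eprintln!("could not run ollama: {err}"),
    }
}
```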

Usage

- Run oneiromancer as follows:

export OLLAMA_BASEURL=custom_baseurl # if not set, the default will be used

export OLLAMA_MODEL=custom_model # if not set, the default will be used

oneiromancer <target_file>.c

- Find the extracted pseudo-code of each decompiled function in <target_file>.out.c:

vim <target_file>.out.c

code <target_file>.out.c

Note: for best results, submit no more than one function at a time to the LLM for analysis.
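The fallback behavior of `OLLAMA_BASEURL` and `OLLAMA_MODEL` can be sketched as follows. The default values here are assumptions: `http://127.0.0.1:11434` is Ollama's usual local address and `aidapal` is the model name created in the Configuration section, but this README does not state which defaults the tool actually uses. Treating an empty value as unset is likewise a choice made for this sketch, and `or_default` is a hypothetical helper.

```rust
use std::env;

// Assumed defaults; the README does not state the actual values.
const DEFAULT_BASEURL: &str = "http://127.0.0.1:11434";
const DEFAULT_MODEL: &str = "aidapal";

/// Return `value` if it is present and not blank, otherwise `default`.
/// Hypothetical helper, not part of the oneiromancer API.
fn or_default(value: Option<String>, default: &str) -> String {
    value
        .filter(|v| !v.trim().is_empty())
        .unwrap_or_else(|| default.to_owned())
}

fn main() {
    let baseurl = or_default(env::var("OLLAMA_BASEURL").ok(), DEFAULT_BASEURL);
    let model = or_default(env::var("OLLAMA_MODEL").ok(), DEFAULT_MODEL);
    println!("base URL: {baseurl}");
    println!("model: {model}");
}
```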

Tested on

- Apple macOS Sequoia 15.2 with Ollama 0.5.11

- Ubuntu Linux 24.04.2 LTS with Ollama 0.5.11

- Microsoft Windows 11 23H2 with Ollama 0.5.11

Changelog

Credits

- Chris Bellows (@AverageBusinessUser) at Atredis Partners for his fine-tuned LLM aidapal <3

TODO

- Improve output file handling with versioning and/or an output directory.

- Implement other features of the IDAPython aidapal IDA Pro plugin (e.g., context).

- Integrate with haruspex and idalib.

- Use custom types in the public API and implement a provider abstraction.

- Implement a "minority report" protocol (i.e., make three queries and select the best responses).

- Investigate other use cases for the aidapal LLM and implement a modular architecture to plug in custom LLMs.
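The "minority report" item above does not say how the best responses would be selected. One possible reading, sketched here purely as an assumption, is a vote over the function names suggested by the three queries: the most frequent name wins, and ties go to the earliest suggestion. `pick_by_vote` and the sample names are hypothetical.

```rust
use std::collections::HashMap;

/// Pick the most frequent suggestion; ties resolve to the earliest one.
/// Hypothetical selection rule, not the project's planned design.
fn pick_by_vote<'a>(suggestions: &[&'a str]) -> Option<&'a str> {
    // Count how often each suggestion occurs.
    let mut counts: HashMap<&'a str, usize> = HashMap::new();
    for &s in suggestions {
        *counts.entry(s).or_insert(0) += 1;
    }
    // Walk the suggestions in their original order so that a strictly
    // higher count is required to displace an earlier candidate.
    let mut best: Option<(&'a str, usize)> = None;
    for &s in suggestions {
        let count = counts[s];
        if best.map_or(true, |(_, best_count)| count > best_count) {
            best = Some((s, count));
        }
    }
    best.map(|(s, _)| s)
}

fn main() {
    let names = ["parse_header", "read_config", "parse_header"];
    println!("{:?}", pick_by_vote(&names));
}
```

The same rule could be applied per variable when merging renaming suggestions, though free-text descriptions would need a different comparison.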
