5 releases

Uses new Rust 2024

| Version | Released |
|---|---|
| 0.1.4 (new) | May 4, 2025 |
| 0.1.3 | Mar 31, 2025 |
| 0.1.2 | Mar 23, 2025 |
| 0.1.1 | Mar 23, 2025 |
| 0.1.0 | Mar 23, 2025 |

LiquidCache is an object store cache designed for DataFusion based systems.

To integrate LiquidCache, you only add an optimizer rule; it handles the rest automatically and can reduce cost and latency by up to 10x.

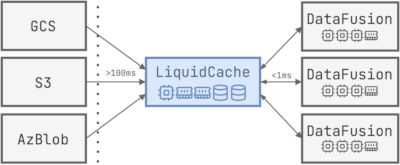

Architecture

Both LiquidCache and DataFusion run on cloud servers within the same region, but are configured differently:

- LiquidCache often has a memory-to-CPU ratio of 16:1, in GB per core (e.g., 64 GB of memory and 4 cores)

- DataFusion often has a memory-to-CPU ratio of 2:1, in GB per core (e.g., 32 GB of memory and 16 cores)

Multiple DataFusion nodes share the same LiquidCache instance through network connections. Each component can be scaled independently as the workload grows.

Under the hood, LiquidCache transcodes and caches Parquet data from object storage, and evaluates filters before sending data to DataFusion, effectively reducing both CPU utilization and network data transfer on cache servers.
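
To make the effect of evaluating filters on the cache side concrete, here is a minimal, self-contained sketch. It is not LiquidCache's implementation: `CachedColumn`, `scan_all`, and `scan_filtered` are hypothetical names, and a plain `Vec<i64>` stands in for transcoded Parquet data. It only illustrates why returning matching rows, rather than the whole column, shrinks what crosses the network.

```rust
/// Hypothetical stand-in for one cached column. Real LiquidCache stores
/// transcoded Parquet data, not a plain Vec.
struct CachedColumn {
    values: Vec<i64>,
}

impl CachedColumn {
    /// No pushdown: ship every value and let the query engine filter.
    fn scan_all(&self) -> Vec<i64> {
        self.values.clone()
    }

    /// Pushdown: evaluate the predicate on the cache server and ship
    /// only the values that pass.
    fn scan_filtered(&self, predicate: impl Fn(i64) -> bool) -> Vec<i64> {
        self.values
            .iter()
            .copied()
            .filter(|v| predicate(*v))
            .collect()
    }
}

fn main() {
    let column = CachedColumn {
        values: (0..1_000).collect(),
    };

    let shipped_without_pushdown = column.scan_all();
    let shipped_with_pushdown = column.scan_filtered(|v| v < 100);

    println!(
        "rows sent without pushdown: {}, with pushdown: {}",
        shipped_without_pushdown.len(),
        shipped_with_pushdown.len()
    );
}
```

In this toy setup the filtered scan ships a tenth of the rows; the actual savings depend on the selectivity of the query's filters.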

Integrate LiquidCache in 5 Minutes

Check out the examples folder for more details.

1. Include the dependencies:

In your existing DataFusion project:

    [dependencies]
    liquid-cache-client = "0.1.0"

2. Start a Cache Server:

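    use arrow_flight::flight_service_server::FlightServiceServer;
    use datafusion::prelude::SessionContext;
    use liquid_cache_server::LiquidCacheService;
    use tonic::transport::Server;

    #[tokio::main]
    async fn main() -> Result<(), Box<dyn std::error::Error>> {
        let liquid_cache = LiquidCacheService::new(
            SessionContext::new(),
            Some(1024 * 1024 * 1024),               // max memory cache size 1GB
            Some(tempfile::tempdir()?.into_path()), // disk cache dir
        );

        // Expose the cache over Arrow Flight on port 50051, on all interfaces.
        let flight = FlightServiceServer::new(liquid_cache);

        Server::builder()
            .add_service(flight)
            .serve("0.0.0.0:50051".parse()?)
            .await?;

        Ok(())
    }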
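
Two literals in the server snippet are worth unpacking: the cache size expression and the listen address. The sketch below uses only the standard library. `GIB` and `parse_listen_addr` are illustrative names that are not part of LiquidCache, and the sketch assumes the address passed to `serve` is parsed into a `std::net::SocketAddr`.

```rust
use std::net::{AddrParseError, SocketAddr};

/// 1024 * 1024 * 1024 bytes, the memory cache size used in the server snippet.
const GIB: usize = 1024 * 1024 * 1024;

/// Parse a listen address such as "0.0.0.0:50051".
/// Hypothetical helper, shown only to illustrate what `.parse()?` does.
fn parse_listen_addr(s: &str) -> Result<SocketAddr, AddrParseError> {
    s.parse()
}

fn main() -> Result<(), AddrParseError> {
    let addr = parse_listen_addr("0.0.0.0:50051")?;

    // 0.0.0.0 is the unspecified address: the server listens on every interface.
    println!("listening on {} (port {})", addr, addr.port());
    println!("memory cache budget: {} bytes", GIB);

    // An address without a port is rejected at parse time,
    // before the server would start.
    assert!(parse_listen_addr("0.0.0.0").is_err());
    Ok(())
}
```

Binding to `0.0.0.0` makes the server reachable from other machines, which is what lets several DataFusion nodes share one cache instance; bind to `127.0.0.1` instead for purely local experiments.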

Or use our pre-built docker image:

    docker run -p 50051:50051 -v ~/liquid_cache:/cache \
        ghcr.io/xiangpenghao/liquid-cache/liquid-cache-server:latest \
        /app/bench_server \
        --address 0.0.0.0:50051 \
        --disk-cache-dir /cache

3. Set up the client:

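    use std::sync::Arc;

    use datafusion::error::Result;
    use datafusion::execution::object_store::ObjectStoreUrl;
    use datafusion::prelude::{SessionConfig, SessionContext};
    use liquid_cache_client::LiquidCacheBuilder;

    // `CacheMode` comes from the LiquidCache crates; `cache_server`,
    // `object_store_url`, `table_name`, and `sql` are supplied by your application.
    #[tokio::main]
    pub async fn main() -> Result<()> {
        //=======================Setup LiquidCache context=======================
        let ctx = LiquidCacheBuilder::new(cache_server)
            .with_object_store(ObjectStoreUrl::parse(object_store_url.as_str())?, None)
            .with_cache_mode(CacheMode::Liquid)
            .build(SessionConfig::from_env()?)?;
        //=======================================================================

        let ctx: Arc<SessionContext> = Arc::new(ctx);

        ctx.register_table(table_name, ...)
            .await?;

        ctx.sql(&sql).await?.show().await?;

        Ok(())
    }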

Community server

We run a community server for LiquidCache at https://hex.tail0766e4.ts.net:50051 (hosted on Xiangpeng's NAS, use at your own risk).

You can try it out by running:

    cargo run --bin example_client --release -- \
        --cache-server https://hex.tail0766e4.ts.net:50051 \
        --file "https://huggingface.co/datasets/HuggingFaceFW/fineweb/resolve/main/data/CC-MAIN-2024-51/000_00042.parquet" \
        --query "SELECT COUNT(*) FROM \"000_00042\" WHERE \"token_count\" < 100"

Expected output (within a second):

    +----------+
    | count(*) |
    +----------+
    | 44805    |
    +----------+
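
The query above refers to a table named `000_00042`, which matches the file name without its `.parquet` extension. Assuming the example client derives the table name that way (the source does not say so explicitly), the following sketch shows the idea with the standard library only. `table_name_from_url` and `count_query` are hypothetical helpers, not part of LiquidCache.

```rust
use std::path::Path;

/// Hypothetical helper: use the file stem of the URL's last path segment
/// as the table name. It does not handle URLs with query strings or fragments.
fn table_name_from_url(url: &str) -> Option<&str> {
    Path::new(url).file_stem()?.to_str()
}

/// Hypothetical helper: build the COUNT query from the example. The
/// identifiers are double-quoted; a table name that starts with a digit
/// would otherwise not parse as an identifier.
fn count_query(table: &str, column: &str, upper_bound: i64) -> String {
    format!("SELECT COUNT(*) FROM \"{table}\" WHERE \"{column}\" < {upper_bound}")
}

fn main() {
    let url = "https://huggingface.co/datasets/HuggingFaceFW/fineweb/resolve/main/data/CC-MAIN-2024-51/000_00042.parquet";

    let table = table_name_from_url(url).expect("URL should end in a file name");
    let sql = count_query(table, "token_count", 100);

    println!("table: {table}");
    println!("query: {sql}");
}
```

The double quotes around `000_00042` are why the shell command escapes them as `\"`.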

Run ClickBench

1. Set Up the Repository

    git clone https://github.com/XiangpengHao/liquid-cache.git
    cd liquid-cache

2. Run a LiquidCache Server

    cargo run --bin bench_server --release

3. Run a ClickBench Client

In a different terminal, run the ClickBench client:

    cargo run --bin clickbench_client --release -- \
        --query-path benchmark/clickbench/queries.sql \
        --file examples/nano_hits.parquet

(Note: replace nano_hits.parquet with the real ClickBench dataset for full benchmarking)

Development

See dev/README.md

Benchmark

FAQ

Can I use LiquidCache in production today?

Not yet. While production readiness is our goal, we are still implementing features and polishing the system. LiquidCache began as a research project exploring new approaches to build cost-effective caching systems. Like most research projects, it takes time to mature, and we welcome your help!

Does LiquidCache cache data or results?

LiquidCache is a data cache. It caches logically equivalent but physically different data from object storage.

LiquidCache does not cache query results - it only caches data, allowing the same cache to be used for different queries.
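
The difference between a data cache and a result cache can be illustrated with a toy example. This is a sketch under simplifying assumptions, not LiquidCache's design: `DataCache` is a hypothetical in-memory map keyed by file and column, and a generated `Vec<i64>` stands in for data read from object storage. Two different queries reuse the same cached column, so the data is fetched only once.

```rust
use std::collections::HashMap;

/// Toy data cache keyed by (file, column). Hypothetical: it only shows
/// that cached data is independent of any particular query.
struct DataCache {
    columns: HashMap<(String, String), Vec<i64>>,
    fetches_from_object_store: usize,
}

impl DataCache {
    fn new() -> Self {
        DataCache {
            columns: HashMap::new(),
            fetches_from_object_store: 0,
        }
    }

    /// Return the cached column, "fetching" it on the first access only.
    fn column(&mut self, file: &str, column: &str) -> &[i64] {
        let key = (file.to_string(), column.to_string());
        if !self.columns.contains_key(&key) {
            self.fetches_from_object_store += 1;
            // Stand-in for reading and transcoding data from object storage.
            self.columns.insert(key.clone(), (0..1_000).collect());
        }
        &self.columns[&key]
    }
}

fn main() {
    let mut cache = DataCache::new();

    // Two different queries over the same column.
    let count = cache
        .column("hits.parquet", "token_count")
        .iter()
        .filter(|v| **v < 100)
        .count();
    let max = cache
        .column("hits.parquet", "token_count")
        .iter()
        .copied()
        .max();

    println!("count = {count}, max = {max:?}");
    println!("object store fetches: {}", cache.fetches_from_object_store);
}
```

A result cache keyed by the SQL text would have missed on the second query, because the query string differs even though the underlying data is the same.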

Nightly Rust, seriously?

We will transition to stable Rust once we believe the project is ready for production.

How does LiquidCache work?

Check out our paper (under submission to VLDB) for more details. Meanwhile, we are working on a technical blog to introduce LiquidCache in a more accessible way.

How can I get involved?

We are always looking for contributors! Any feedback or improvements are welcome. Feel free to explore the issue list and contribute to the project. If you want to get involved in the research process, feel free to reach out.

Who is behind LiquidCache?

LiquidCache is a research project funded by:

- InfluxData

- Taxpayers of the state of Wisconsin and the federal government.

As such, LiquidCache is and will always be open source and free to use.

Your support for science is greatly appreciated!

License
